Han Hu, Zhimin Xu, Xinggong Zhang, Zongming Guo*, "Optimal Viewport-Adaptive 360-degree Video Streaming against Random Head Movement", Proc. of the International Conference on Communications (ICC'2019), 20-24 May 2019, Shanghai, China.

Abstract:

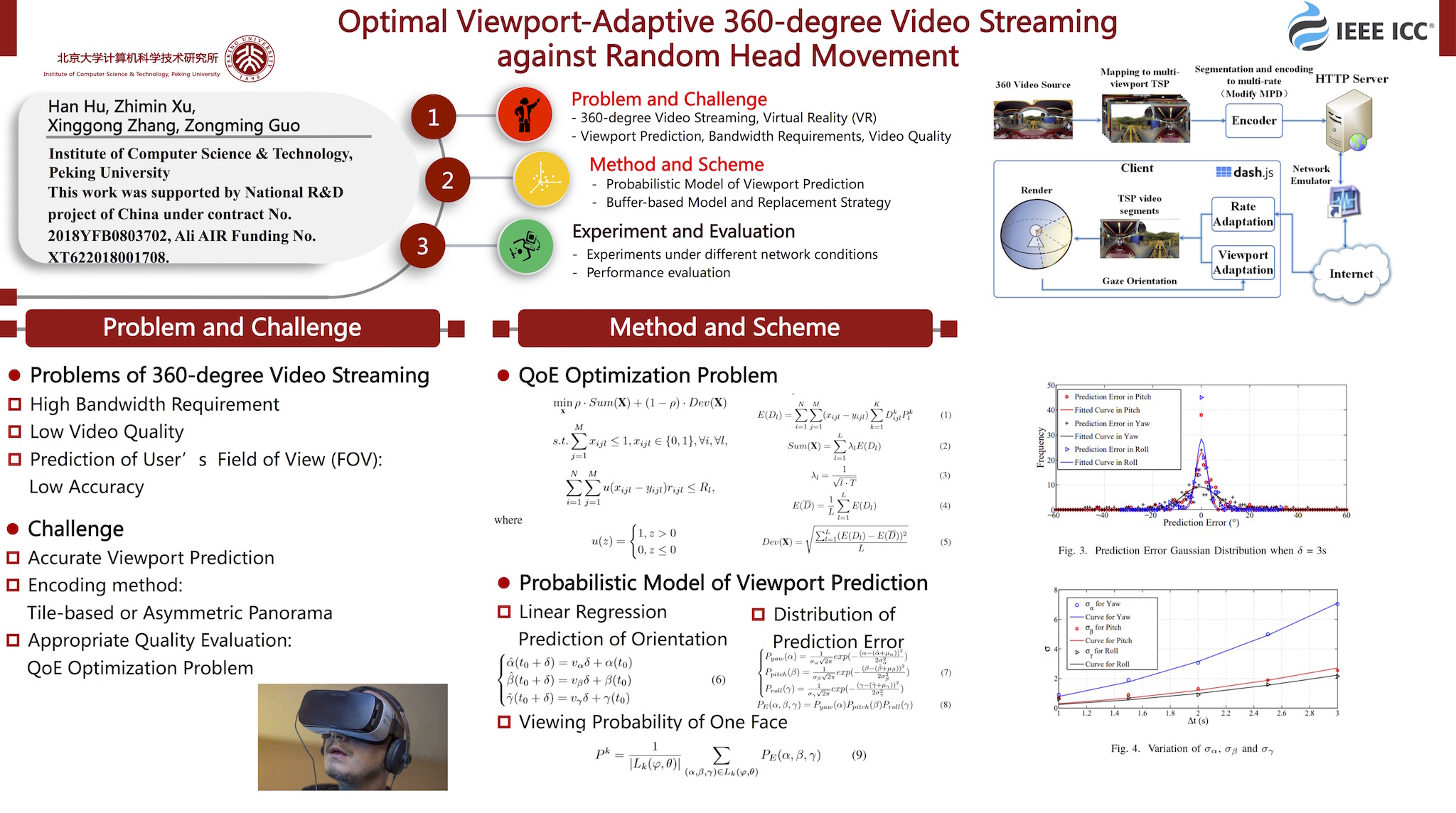

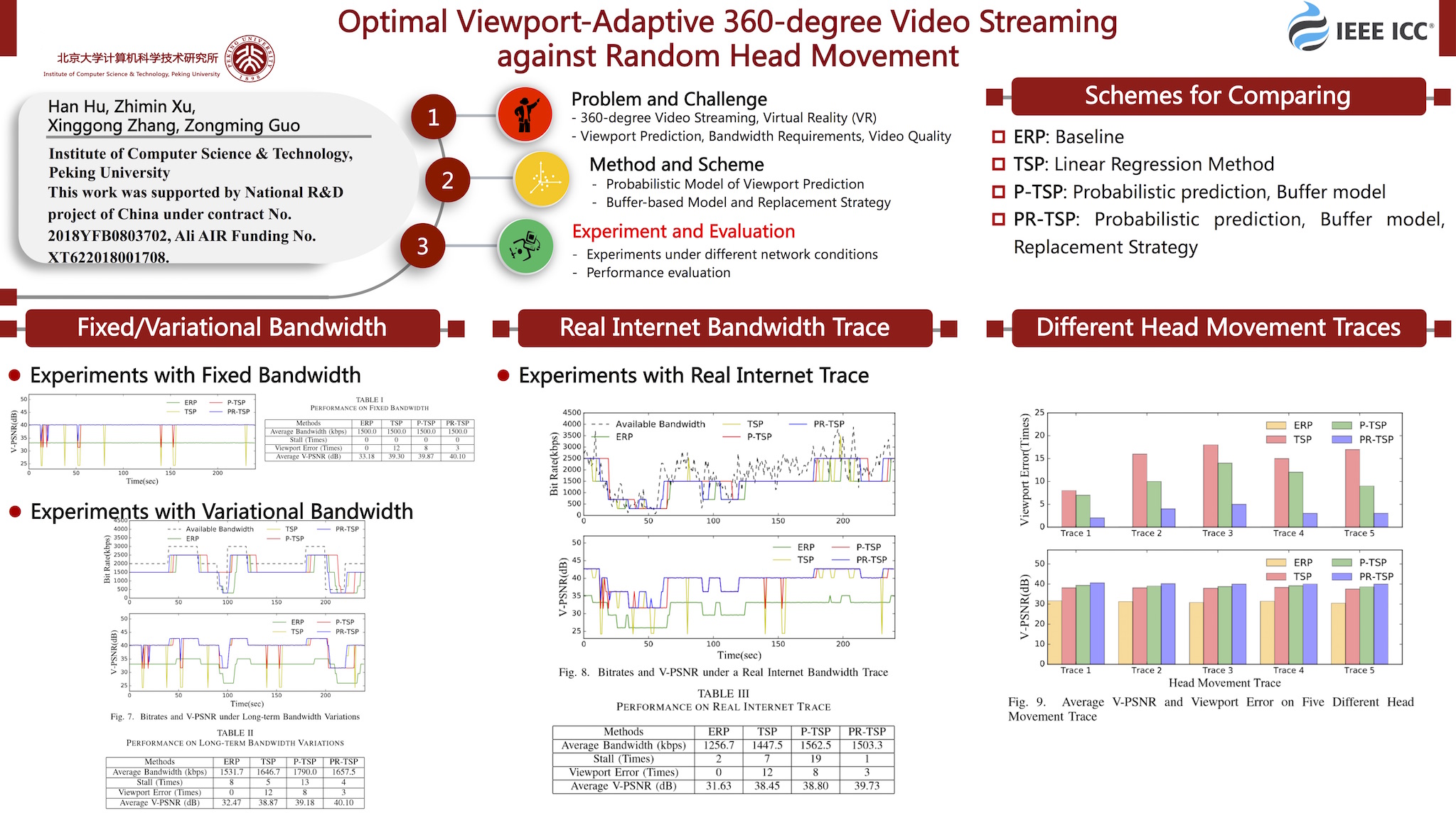

Interest in 360-degree virtual reality (VR) video has grown significantly in recent years. However, designing a robust adaptive streaming approach and implementing it in a practical system remains a key problem. Traditional streaming methods, which are not sensitive to the user’s viewport, can consume a large bandwidth budget while delivering low video quality, whereas viewport-adaptive schemes may not be accurate enough, especially under random head movement.

In this paper, we design an optimal viewport-adaptive 360-degree video streaming scheme that maximizes Quality of Experience (QoE) by predicting the user’s viewport with a probabilistic model, prefetching video segments into the buffer, and replacing segments that no longer fit the viewport. The scheme targets continuous and smooth playback, high bandwidth utilization, low viewport prediction error, and high peak signal-to-noise ratio in the viewport (V-PSNR). To cope with the user’s head movement, we reduce the prediction error rate with a probabilistic viewport prediction model and apply a replacement strategy that updates already-downloaded segments when the user’s viewport changes suddenly. To reduce smoothness loss, segment oscillation during playback is also taken into account. We have also developed a prototype system based on our method. Experimental results show that our scheme achieves better performance.
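To make the idea of probabilistic viewport prediction with segment replacement more concrete, the following is a minimal Python sketch and not the paper's actual model. It assumes, purely for illustration, that the video is split into equal-width yaw tiles, that the prediction error of the head yaw is zero-mean Gaussian, and that a buffered tile is a candidate for replacement once its viewing probability crosses a fixed threshold. The function names, the Gaussian error model, the tile layout, and the threshold are all hypothetical.

```python
import math


def normal_cdf(x, mu, sigma):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def tile_view_probabilities(pred_yaw_deg, sigma_deg, n_tiles=8, fov_deg=90.0):
    """Probability that each yaw tile overlaps the viewport.

    Assumption (not from the paper): true yaw = predicted yaw + e,
    with e ~ N(0, sigma_deg^2). Wraparound of the Gaussian tails is
    ignored, which is reasonable only when sigma_deg is small
    compared with 180 degrees.
    """
    tile_width = 360.0 / n_tiles
    # A tile overlaps the viewport when the true yaw lies within this
    # angular distance of the tile center.
    half_span = fov_deg / 2.0 + tile_width / 2.0
    probs = []
    for i in range(n_tiles):
        center = (i + 0.5) * tile_width
        # Signed offset of the tile center from the predicted yaw, in [-180, 180).
        offset = (center - pred_yaw_deg + 180.0) % 360.0 - 180.0
        # P(|e - offset| < half_span) under the Gaussian error model.
        p = (normal_cdf(offset + half_span, 0.0, sigma_deg)
             - normal_cdf(offset - half_span, 0.0, sigma_deg))
        probs.append(p)
    return probs


def tiles_to_replace(buffered_high_quality, probs, threshold=0.9):
    """Tiles that are now likely to be viewed but are not buffered in high quality."""
    return [i for i, p in enumerate(probs)
            if p >= threshold and i not in buffered_high_quality]


# The buffer was filled while the user looked toward yaw 0 degrees.
initial = tile_view_probabilities(pred_yaw_deg=0.0, sigma_deg=10.0)
buffered = {i for i, p in enumerate(initial) if p >= 0.9}

# The user suddenly turns around; the updated prediction is yaw 180 degrees.
updated = tile_view_probabilities(pred_yaw_deg=180.0, sigma_deg=10.0)
replace = tiles_to_replace(buffered, updated)
print(sorted(buffered), replace)
```

In this toy setup, the tiles around the old viewing direction are buffered in high quality first, and after the sudden turn the tiles around the new direction are flagged for replacement. A real system would additionally weigh the available bandwidth, the remaining buffer time, and the smoothness cost of switching quality, which this sketch omits.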