Adaptive Batch Normalization for Practical Domain Adaptation

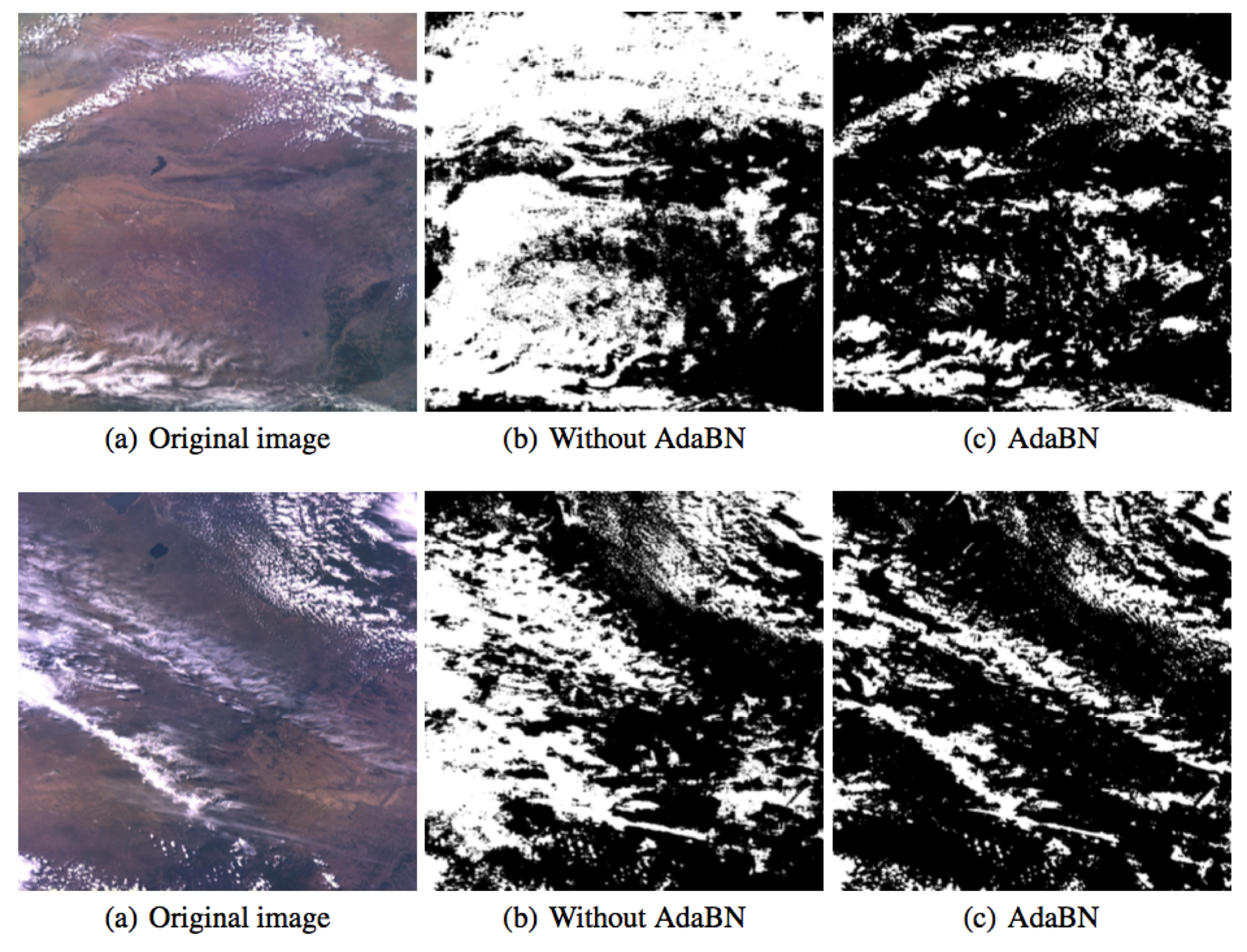

Fig. 1: A practical application of visual cloud detection for remote sensing images.

Abstract

Deep neural networks (DNNs) have shown unprecedented success in various computer vision applications such as image classification and object detection. However, it remains a common annoyance during the training phase that one has to prepare at least thousands of labeled images to fine-tune a network to a specific domain. A recent study (Tommasi et al., 2015) shows that a DNN depends strongly on its training dataset, and that the learned features cannot be easily transferred to a different but relevant task without fine-tuning. In this paper, we propose a simple yet powerful remedy, called Adaptive Batch Normalization (AdaBN), to increase the generalization ability of a DNN. By modulating the statistics from the source domain to the target domain in all Batch Normalization layers across the network, our approach achieves a deep adaptation effect for domain adaptation tasks. In contrast to other deep learning domain adaptation methods, our method does not require additional components and is parameter-free. It achieves state-of-the-art performance despite its surprising simplicity. Furthermore, we demonstrate that our method is complementary to other existing methods: combining AdaBN with existing domain adaptation treatments may further improve model performance.
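To make the idea of modulating Batch Normalization statistics concrete, the following is a minimal sketch rather than the released implementation. It assumes a single normalization layer acting on plain feature vectors, with learned scale `gamma` and shift `beta` kept fixed. The helper names `feature_stats` and `batch_norm` and the toy `source` and `target` data are hypothetical and chosen only for illustration. The sketch normalizes target-domain features twice: once with statistics estimated on the source domain, and once with statistics re-estimated on the target domain.

```python
import math
from statistics import fmean, pvariance


def feature_stats(batch):
    """Return per-feature means and population variances of a batch of vectors."""
    columns = list(zip(*batch))
    means = [fmean(col) for col in columns]
    variances = [pvariance(col, mu) for col, mu in zip(columns, means)]
    return means, variances


def batch_norm(x, means, variances, gamma, beta, eps=1e-5):
    """Normalize one feature vector with the given statistics, then scale and shift."""
    return [
        g * (v - m) / math.sqrt(s + eps) + b
        for v, m, s, g, b in zip(x, means, variances, gamma, beta)
    ]


# Toy activations (hypothetical): the two domains have different feature statistics.
source = [[0.0, 10.0], [2.0, 14.0], [4.0, 18.0]]
target = [[5.0, 1.0], [7.0, 3.0], [9.0, 5.0]]

# Scale and shift are treated as already learned on the source domain and left unchanged.
gamma, beta = [1.0, 1.0], [0.0, 0.0]

source_stats = feature_stats(source)
target_stats = feature_stats(target)

# Standard inference: target samples normalized with source-domain statistics.
plain = [batch_norm(x, *source_stats, gamma, beta) for x in target]

# Adapted inference: the same samples normalized with target-domain statistics.
adapted = [batch_norm(x, *target_stats, gamma, beta) for x in target]

plain_means, _ = feature_stats(plain)
adapted_means, adapted_vars = feature_stats(adapted)
print("mean with source statistics:", plain_means)
print("mean with target statistics:", adapted_means)
```

With source statistics, the normalized target features are shifted away from zero mean; with target statistics, they are standardized again, and no weights are retrained. In a full network the same replacement would be applied in every Batch Normalization layer, with the target statistics estimated from unlabeled target-domain data.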

Resources

Citation

@article{li2018adaptive, title={Adaptive Batch Normalization for practical domain adaptation}, author={Li, Yanghao and Wang, Naiyan and Shi, Jianping and Hou, Xiaodi and Liu, Jiaying}, journal={Pattern Recognition}, volume={80}, pages={109--117}, year={2018}, publisher={Elsevier} }

@inproceedings{li2017revisiting, title={Revisiting Batch Normalization For Practical Domain Adaptation}, author={Li, Yanghao and Wang, Naiyan and Shi, Jianping and Liu, Jiaying and Hou, Xiaodi}, booktitle={International Conference on Learning Representations Workshop}, year={2017} }

Results

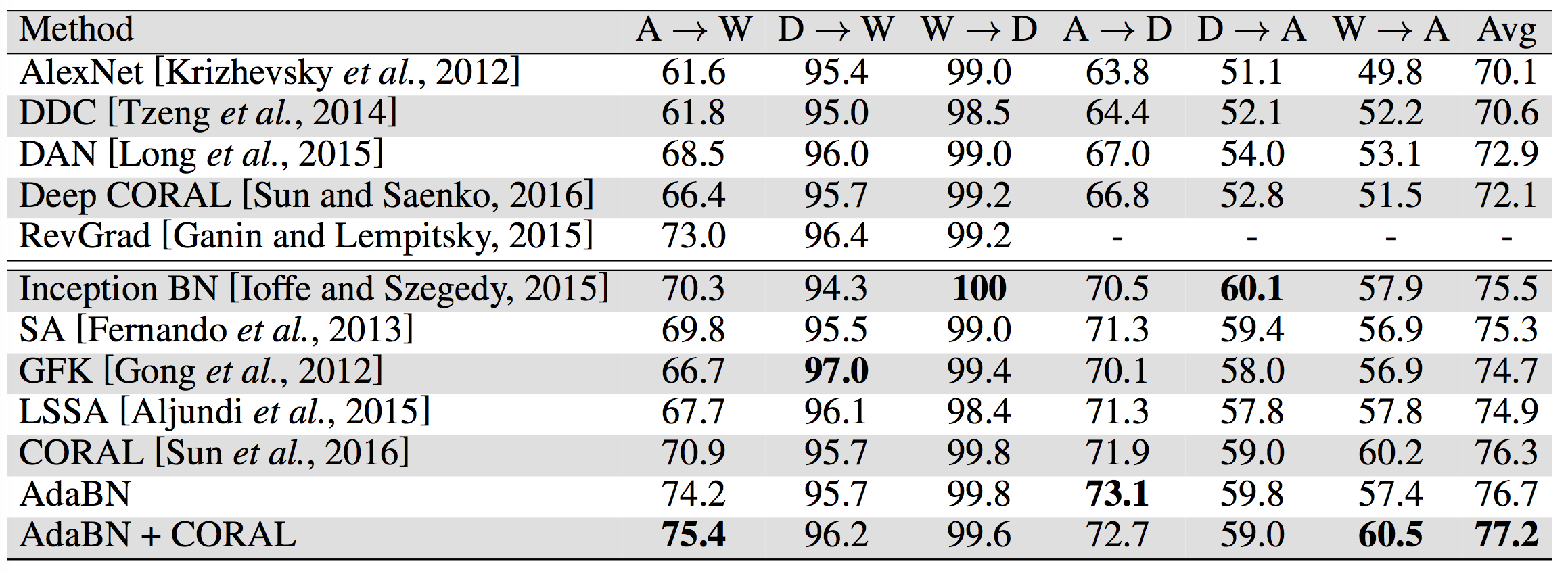

Table 1: Single-source domain adaptation results on the Office-31 dataset with the standard unsupervised adaptation protocol.

Table 2: Multi-source domain adaptation results on the Office-31 dataset with the standard unsupervised adaptation protocol.