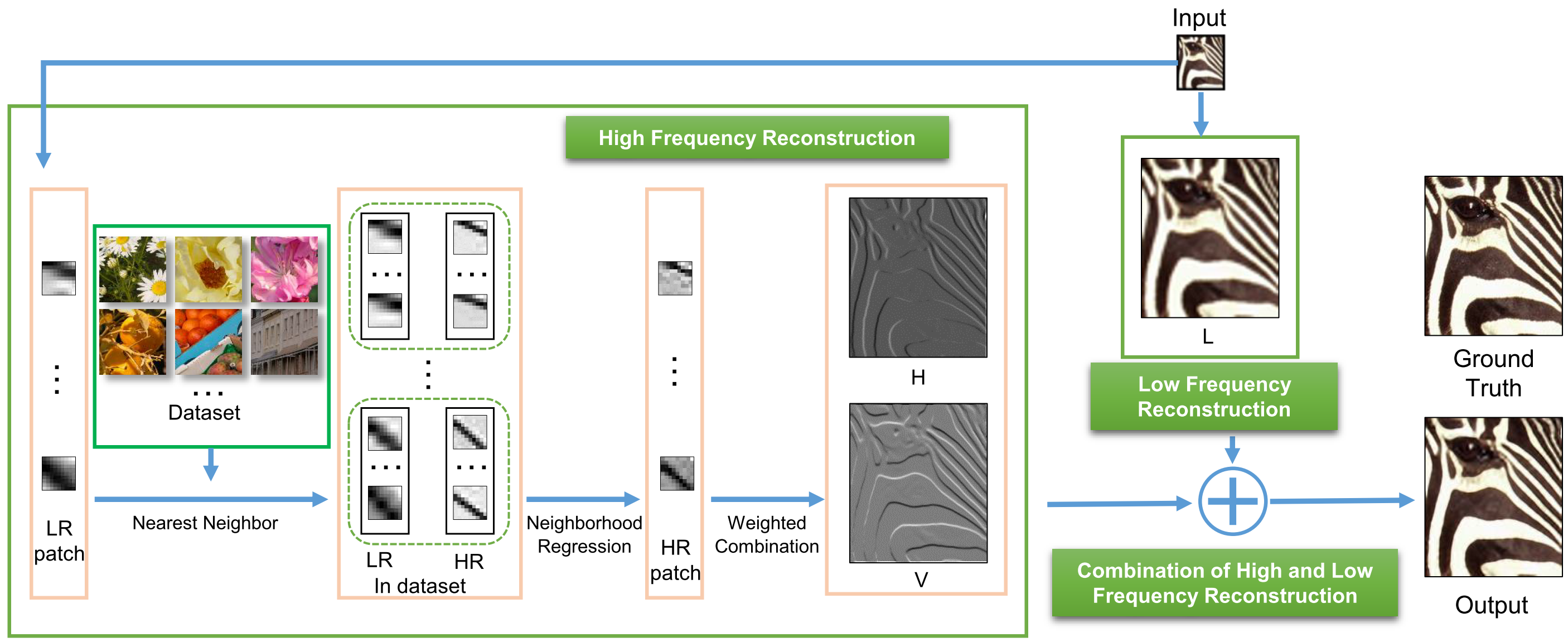

Fig. 1. An overview of the proposed method. The input LR image is reconstructed into an HR image by a low-frequency reconstruction part, a high-frequency reconstruction part, and a final combination part. H and V are the reconstructed horizontal and vertical gradient images, and L is the reconstructed low-frequency component. Note that the patches in the high-frequency reconstruction part are all taken from edge maps, which represent high-frequency details.
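The high-frequency reconstruction part maps LR patches to HR edge-map patches. The page names this step Neighborhood Regression but does not give its formula, so the sketch below assumes an anchored-neighborhood-style ridge regression in the spirit of ANR [3]: find the K nearest LR training features, fit ridge weights that reconstruct the query from them, and apply the same weights to the coupled HR edge-map patches. The function name, the dictionary layout (one sample per row), and the values of `k` and `lam` are all hypothetical choices for illustration, not the authors' settings.

```python
import numpy as np


def neighborhood_regression(query, lr_dict, hr_dict, k=5, lam=0.1):
    """Estimate one HR edge-map patch from one LR feature vector.

    Assumed ANR-style formulation (hypothetical, for illustration only).
    query:   (d_l,)   LR feature vector of the input patch
    lr_dict: (n, d_l) LR training features, one per row
    hr_dict: (n, d_h) coupled HR edge-map patches, one per row
    """
    # Find the k nearest training features (Euclidean distance).
    dists = np.linalg.norm(lr_dict - query, axis=1)
    idx = np.argsort(dists)[:k]
    n_l = lr_dict[idx].T  # (d_l, k): LR neighbours as columns
    n_h = hr_dict[idx].T  # (d_h, k): their coupled HR patches
    # Ridge regression: w = argmin ||query - n_l w||^2 + lam * ||w||^2
    gram = n_l.T @ n_l + lam * np.eye(n_l.shape[1])
    w = np.linalg.solve(gram, n_l.T @ query)
    # Reuse the same weights on the HR side.
    return n_h @ w


# Usage with random stand-in data (3x3 LR features, 6x6 HR patches).
rng = np.random.default_rng(0)
lr = rng.normal(size=(200, 9))
hr = rng.normal(size=(200, 36))
patch = neighborhood_regression(lr[0], lr, hr, k=5)
print(patch.shape)  # (36,)
```

In a full pipeline this would run once per overlapping LR patch, separately for the horizontal and vertical edge maps, and the overlapping HR estimates would be averaged into H and V.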

Abstract

Many works on image super-resolution employ different priors or external databases to enhance high-resolution (HR) results. However, most of them do not reconstruct high-frequency image details well, even though these are the details to which the human visual system is most sensitive. Rather than reconstructing all components of the image directly, we propose a novel edge-preserving super-resolution algorithm that reconstructs the low- and high-frequency components separately. In this paper, a Neighborhood Regression method is proposed to reconstruct high-frequency details on edge maps, while the low-frequency part is reconstructed by traditional bicubic interpolation. We then apply an iterative combination method to obtain the estimated HR result, based on an energy minimization function that contains both a low-frequency consistency term and a high-frequency adaptation term. Extensive experiments evaluate the effectiveness and performance of our algorithm and show that our method is competitive with, or even better than, state-of-the-art methods.
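The abstract does not spell out the energy function, so the sketch below assumes a simple quadratic form, $E(X) = \|X - L\|_2^2 + \lambda\left(\|\partial_x X - H\|_2^2 + \|\partial_y X - V\|_2^2\right)$, where the first term stands in for low-frequency consistency, the bracketed term stands in for high-frequency adaptation, $\partial_x$ and $\partial_y$ are forward differences, and $L$, $H$, $V$ are as in Fig. 1. Plain gradient descent then gives one possible iterative combination. The exact form of both terms, the weight `lam`, the step size, and the iteration count are assumptions, not the authors' formulation.

```python
import numpy as np


def dx(img):
    """Horizontal forward difference; last column set to 0."""
    out = np.zeros_like(img)
    out[:, :-1] = img[:, 1:] - img[:, :-1]
    return out


def dy(img):
    """Vertical forward difference; last row set to 0."""
    out = np.zeros_like(img)
    out[:-1, :] = img[1:, :] - img[:-1, :]
    return out


def dx_t(g):
    """Adjoint (transpose) of dx."""
    out = np.zeros_like(g)
    out[:, :-1] -= g[:, :-1]
    out[:, 1:] += g[:, :-1]
    return out


def dy_t(g):
    """Adjoint (transpose) of dy."""
    out = np.zeros_like(g)
    out[:-1, :] -= g[:-1, :]
    out[1:, :] += g[:-1, :]
    return out


def energy(x, low, h, v, lam):
    """Assumed energy: low-frequency fit plus weighted gradient fit."""
    return (np.sum((x - low) ** 2)
            + lam * (np.sum((dx(x) - h) ** 2) + np.sum((dy(x) - v) ** 2)))


def combine(low, h, v, lam=1.0, n_iter=300):
    """Minimize the assumed energy by gradient descent, starting from L."""
    x = low.astype(float).copy()
    # Each forward-difference operator has squared norm <= 4, so the
    # gradient is Lipschitz with constant <= 2 + 16 * lam; use 1 / that.
    step = 1.0 / (2.0 + 16.0 * lam)
    for _ in range(n_iter):
        grad = 2.0 * (x - low) + 2.0 * lam * (
            dx_t(dx(x) - h) + dy_t(dy(x) - v))
        x -= step * grad
    return x
```

With H and V taken from the high-frequency reconstruction and L from bicubic upsampling, `combine(L, H, V)` returns the fused estimate; a larger `lam` trusts the reconstructed edge maps more relative to the bicubic image.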

Experimental results

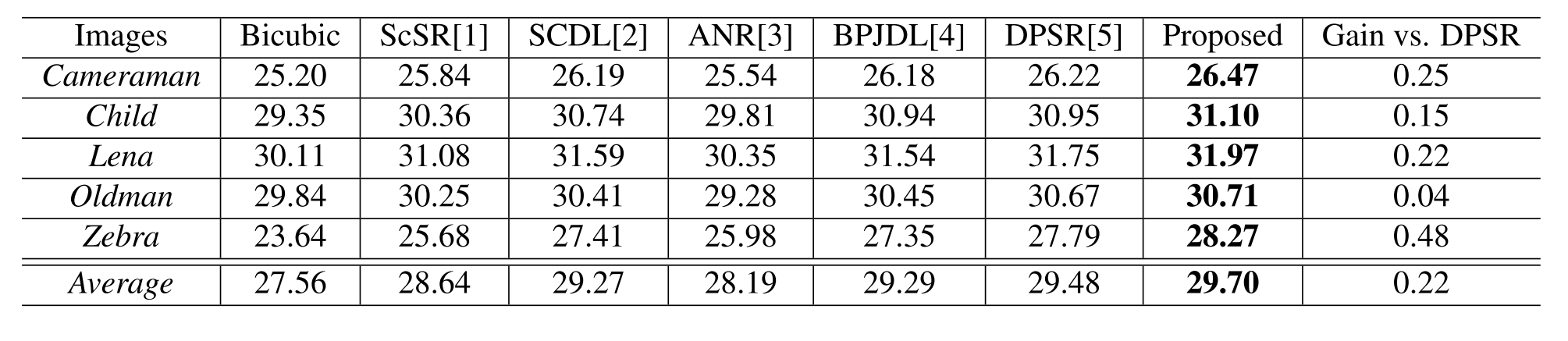

Table 1. PSNR (dB) results for image super-resolution (scaling factor = 3)
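PSNR, the metric reported in Table 1, is $10\log_{10}(\mathrm{peak}^2/\mathrm{MSE})$, where MSE is the mean squared error between the reconstructed and ground-truth images. The sketch below assumes 8-bit images (peak 255) and averages over all pixels; whether Table 1 was computed on the luminance channel only or with image borders cropped, as many super-resolution papers do, is not stated here.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-shape images."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)
```

Because PSNR is logarithmic in MSE, a gain of about 0.1 dB in the table corresponds to roughly a 2% reduction in mean squared error.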

Visual results on several test images.

1. Lena

(a) Bicubic

(b) ScSR [1]

(c) SCDL [2]

(d) ANR [3]

(e) BPJDL [4]

(f) DPSR [5]

(g) Proposed

(h) Ground Truth

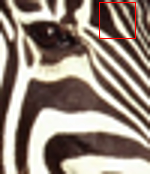

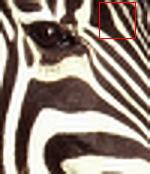

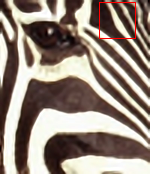

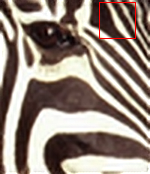

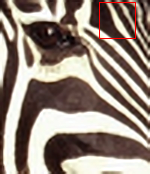

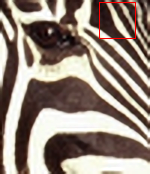

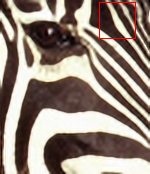

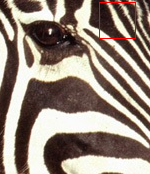

2. Zebra

(a) Bicubic

(b) ScSR [1]

(c) SCDL [2]

(d) ANR [3]

(e) BPJDL [4]

(f) DPSR [5]

(g) Proposed

(h) Ground Truth

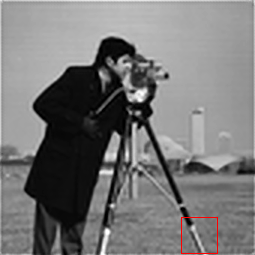

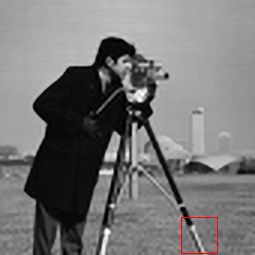

3. Cameraman

(a) Bicubic

(b) ScSR [1]

(c) SCDL [2]

(d) ANR [3]

(e) BPJDL [4]

(f) DPSR [5]

(g) Proposed

(h) Ground Truth

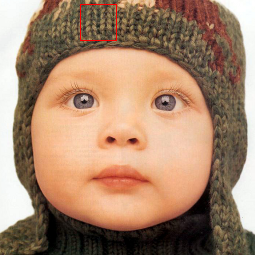

4. Child

(a) Bicubic

(b) ScSR [1]

(c) SCDL [2]

(d) ANR [3]

(e) BPJDL [4]

(f) DPSR [5]

(g) Proposed

(h) Ground Truth

References

[1] Jianchao Yang, John Wright, Thomas Huang, and Yi Ma, “Image super-resolution via sparse representation,” IEEE Transactions on Image Processing, 2010.

[2] Shenlong Wang, Lei Zhang, Yan Liang, and Quan Pan, “Semi-coupled dictionary learning with applications to image super-resolution and photo-sketch synthesis,” in Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition, 2012.

[3] Radu Timofte, Vincent De Smet, and Luc Van Gool, “Anchored neighborhood regression for fast example-based super-resolution,” in Proc. IEEE Int’l Conf. Computer Vision, 2013.

[4] Li He, Hairong Qi, and Russell Zaretzki, “Beta process joint dictionary learning for coupled feature spaces with application to single image super-resolution,” in Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition, 2013.

[5] Yu Zhu, Yanning Zhang, and Alan L. Yuille, “Single image super-resolution using deformable patches,” in Proc. IEEE Int’l Conf. Computer Vision and Pattern Recognition, 2014.