- Jie Ren (renjie@pku.edu.cn)
- Yue Zhuo (zyue1105@mail.bnu.edu.cn)
- Jiaying Liu (liujiaying@pku.edu.cn)
- Zongming Guo (guozongming@pku.edu.cn)

Publication Bibtex

@Inproceedings{RZL+2012,
author = {Jie Ren and Yue Zhuo and Jiaying Liu and Zongming Guo},
title = {Illumination-Invariant Non-Local Means Based Video Denoising},
booktitle = {Image Processing (ICIP), 2012 19th IEEE International Conference on},
year = {2012}
}

Published in ICIP, September 2012.

Introduction

Image and video denoising is a long-standing research area in the image processing community. It aims to recover a high-quality clean image (or image sequence) from its noisy version, which may have been captured, for example, by a low-end imaging device and/or under adverse conditions. A key to image and video denoising is exploiting prior information. For video denoising, the main focus of this paper, the temporal coherence of image sequences is an important ingredient in designing an efficient algorithm. To exploit this temporal information, motion-compensated image sequence filters have been proposed. However, motion estimation is a difficult problem, mainly because of the aperture problem in textureless regions, and filtering along inaccurate trajectories can lead to blur and information loss.

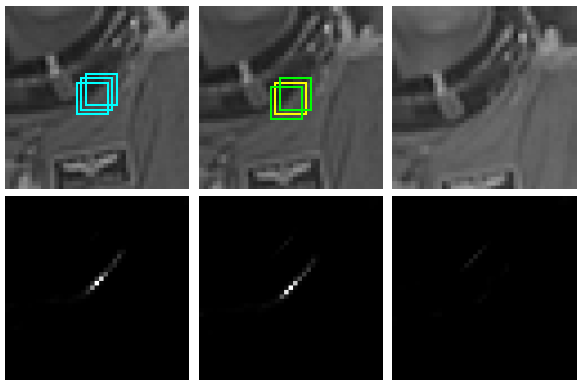

Instead of relying on robust and reliable motion estimation, Buades et al. [1] proposed Non-Local Means (NLM) filtering for image sequences. The idea of NLM is that patches with similar structural patterns can be spatially far from each other, so similar patches can be collected from the whole image rather than only from a small neighborhood. In the original NLM video denoising framework and its variants, it is implicitly assumed that intrinsically similar local structures in the image sequence share a coherent illumination condition. Under this assumption, the intensities of an image patch do not change significantly and can be used directly as the feature vector of the local structure. However, the illumination of a video scene often changes during capture, e.g., due to the flashlight effect. As shown in Fig. 1, when a neighboring frame has an illumination change, patch matching may miss patches that are intrinsically similar in structure, even though they look very similar.

Fig. 1. Non-local patch search in a space-temporal volume consisting of search windows located in three adjacent frames, where the rightmost frame has an illumination change caused by a flashlight. The colored boxes mark patches similar to the current patch (yellow box) found by the non-local search. The second row shows the weight distribution over each temporal slice of the 3D space-temporal volume.
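The NLM idea described above can be sketched for a single pixel as follows. This is a minimal illustration, not the implementation of [1]: it assumes a 2-D grayscale frame and uses the plain mean squared difference between patches as the distance (the original NLM uses a Gaussian-weighted distance). The function `nlm_pixel` and its parameters `patch_radius`, `search_radius`, and `h` are hypothetical names chosen for this example.

```python
import numpy as np


def nlm_pixel(img, i, j, patch_radius=1, search_radius=3, h=10.0):
    """Single-frame NLM estimate of pixel (i, j) of a 2-D grayscale frame.

    Weights are w = exp(-d2 / h**2), where d2 is the mean squared
    difference between the reference patch and a candidate patch.
    """
    img = np.asarray(img, dtype=np.float64)
    p, s = patch_radius, search_radius
    padded = np.pad(img, p + s, mode="reflect")  # border pixels get full windows
    ci, cj = i + p + s, j + p + s                # (i, j) in padded coordinates
    ref = padded[ci - p:ci + p + 1, cj - p:cj + p + 1]

    num = den = 0.0
    for y in range(ci - s, ci + s + 1):
        for x in range(cj - s, cj + s + 1):
            cand = padded[y - p:y + p + 1, x - p:x + p + 1]
            w = np.exp(-np.mean((ref - cand) ** 2) / h**2)
            num += w * padded[y, x]  # weighted vote of the candidate's centre pixel
            den += w
    return num / den


rng = np.random.default_rng(0)
clean = np.tile(np.linspace(50, 200, 16), (16, 1))  # horizontal ramp
noisy = clean + rng.normal(0.0, 10.0, clean.shape)
estimate = nlm_pixel(noisy, 8, 8)
```

Because the weights are normalized, the estimate is always a convex combination of pixels in the search window; similar patches anywhere in that window dominate the average, which is what lets NLM avoid explicit motion estimation.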

We therefore argue that NLM-based video denoising needs special handling to stay robust, especially when illumination changes are taken into account. We use NLM as the backbone of our system and propose several possible techniques to address the illumination-invariance issue. After analyzing and comparing these techniques, we integrate the histogram-processing-based technique into the NLM framework because of its robustness to noise, scene changes, and illumination changes.

Multi-frame NLM vs. Single-frame NLM

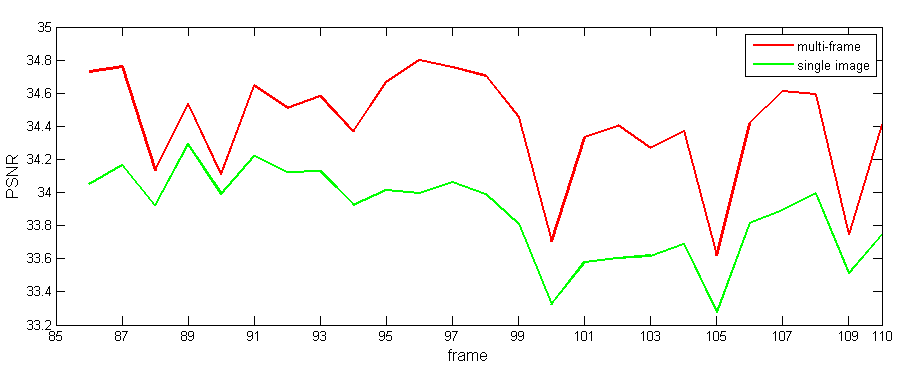

In [1], the NLM-based image denoising algorithm was extended to video denoising by aggregating patches in a space-temporal volume, which avoids explicit motion estimation. Thanks to the temporal coherence of image sequences, more similar patches can be found than by searching within the current frame alone. As shown in Fig. 2, multi-frame NLM-based denoising (using three adjacent frames) performs much better than single-frame NLM-based denoising.

However, when adjacent frames have different illumination conditions, e.g., because of a flashlight, the number of similar patches found by the original NLM-based method drops sharply, as illustrated in Fig. 1. This motivates us to improve NLM-based video denoising by making the similar-patch search more robust to illumination changes.

Fig. 2. PSNR curves of the multi-frame and single-frame NLM-based denoising methods on the Crew sequence.
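The drop in redundancy under a flashlight can be reproduced with a small sketch that applies the NLM weighting to a three-frame space-temporal volume and reports how much of the total weight each frame contributes. The helper `temporal_weight_share` is hypothetical, uses an assumed weight $\exp(-d^2/h^2)$ with $d^2$ the mean squared patch difference, and models the flashlight crudely as a uniform brightness offset of 60 gray levels on the last frame.

```python
import numpy as np


def temporal_weight_share(frames, t, i, j, patch_radius=1, search_radius=3, h=10.0):
    """Share of the total NLM weight that each frame of a space-temporal
    search volume contributes to the reference patch at (i, j) in frame t.

    (i, j) must be at least patch_radius + search_radius pixels from the
    border; no padding is done, to keep the sketch short.
    """
    p, s = patch_radius, search_radius
    ref = frames[t][i - p:i + p + 1, j - p:j + p + 1].astype(np.float64)
    totals = []
    for f in frames:
        f = f.astype(np.float64)
        total = 0.0
        for y in range(i - s, i + s + 1):
            for x in range(j - s, j + s + 1):
                cand = f[y - p:y + p + 1, x - p:x + p + 1]
                total += np.exp(-np.mean((ref - cand) ** 2) / h**2)
        totals.append(total)
    totals = np.array(totals)
    return totals / totals.sum()


rng = np.random.default_rng(1)
frame = 100.0 + rng.normal(0.0, 5.0, (20, 20))
same = temporal_weight_share([frame, frame, frame], 1, 10, 10)
flash = temporal_weight_share([frame, frame, frame + 60.0], 1, 10, 10)
# `same` gives each frame about 1/3 of the weight; in `flash` the
# brightened frame's share is close to 0.
```

Every patch in the brightened frame differs from the reference patch by roughly 60 gray levels, so its weights vanish even though the structure is identical.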

Illumination-Invariant Improvement Strategies

Within the NLM-based framework, processing can operate at three scales: the patch scale, the search-window scale, and the frame scale. A patch contains few pixels and is therefore more sensitive to noise, while the frame scale captures the scene structure but may not adapt to local illumination changes. The search-window scale is a good trade-off between the patch scale and the frame scale.

Let $S(i,t)$ be the search window centered at pixel $i$ in the $t$th frame, and let $S(i,t+k)$ denote the search window in the $k$th adjacent frame. We define a filter $F$ that processes $S(i,t+k)$ so that it has a visual appearance similar to $S(i,t)$.

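For convenience, let $m_t$, $M_t$, and $\mu_t$ denote the minimum, maximum, and average intensity levels of $S(i,t)$, and let $m_{t+k}$, $M_{t+k}$, and $\mu_{t+k}$ denote those of $S(i,t+k)$.

We propose three strategies to implement the filter $F$:

(a). Direct linear mapping function (i.e., contrast-stretching transformation), $F_a(r) = \dfrac{M_t - m_t}{M_{t+k} - m_{t+k}}\,(r - m_{t+k}) + m_t$, where $r$ is an intensity level in $S(i,t+k)$;

(b). A mapping function $F_b$ that, unlike $F_a$, also keeps the average intensity of the filtered window equal to that of $S(i,t)$;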

(c). Histogram specification processing, $F_c(r) = G^{-1}\big(T(r)\big)$, with $T(r) = \int_0^{r} p_r(w)\,dw$ and $G(z) = \int_0^{z} p_z(w)\,dw$,

where $p_r(r)$ and $p_z(z)$ are the probability density functions corresponding to intensity level $r$ in $S(i,t+k)$ and intensity level $z$ in $S(i,t)$, respectively.
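Strategies (a) and (c) can be sketched on small windows as follows, assuming 8-bit integer intensities. `contrast_stretch` maps the minimum and maximum of $S(i,t+k)$ linearly onto those of $S(i,t)$, and `histogram_specification` implements the usual discrete counterpart of $z = G^{-1}(T(r))$, taking $G^{-1}$ as the smallest level whose cumulative probability reaches $T(r)$. Both function names and the flat-window fallback are our own illustrative choices.

```python
import numpy as np


def contrast_stretch(src, ref):
    """Strategy (a): map [min, max] of src (S(i,t+k)) linearly onto [min, max] of ref (S(i,t))."""
    src = np.asarray(src, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    m_src, M_src = src.min(), src.max()
    m_ref, M_ref = ref.min(), ref.max()
    if M_src == m_src:
        # Flat window: nothing to stretch; returning ref's mean is our own choice.
        return np.full_like(src, ref.mean())
    return (M_ref - m_ref) / (M_src - m_src) * (src - m_src) + m_ref


def histogram_specification(src, ref, levels=256):
    """Strategy (c): discrete z = G^{-1}(T(r)) for integer intensities in [0, levels)."""
    src, ref = np.asarray(src), np.asarray(ref)
    bins = np.arange(levels + 1)
    T = np.cumsum(np.histogram(src, bins=bins)[0]) / src.size  # CDF of S(i, t+k)
    G = np.cumsum(np.histogram(ref, bins=bins)[0]) / ref.size  # CDF of S(i, t)
    # G^{-1}: smallest level z whose cumulative probability reaches T(r)
    lut = np.clip(np.searchsorted(G, T, side="left"), 0, levels - 1)
    return lut[src.astype(np.intp)]


ref = np.array([[10, 20], [30, 40]])        # stands in for S(i, t)
flash = ref + 100                           # brighter copy, stands in for S(i, t+k)
by_a = contrast_stretch(flash, ref)         # [[10., 20.], [30., 40.]]
by_c = histogram_specification(flash, ref)  # [[10, 20], [30, 40]]

skewed = np.array([[0, 0], [0, 100]])
target = np.array([[0, 50], [50, 100]])
out = contrast_stretch(skewed, target)      # min and max match, but the mean is 25, not 50
```

On a uniformly brightened window both strategies recover the original levels. The last example shows that matching the minimum and maximum alone does not match the average intensity, which is the limitation of (a) discussed below.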

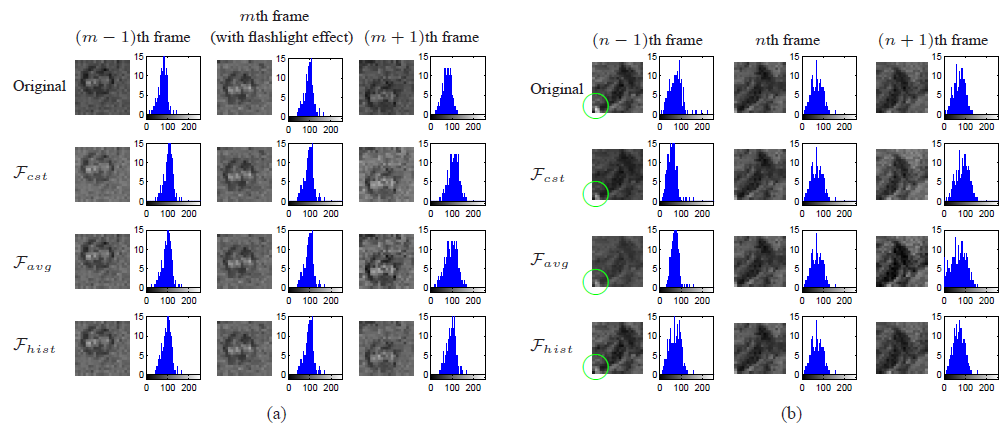

We compare the three strategies in two scenarios in Fig. 3.

Fig. 3. Comparison of the filtering effects of the three filtering methods. (a) Search windows in three adjacent frames, with an illumination change in the middle frame; (b) search windows in three adjacent frames without illumination changes.

In Fig. 3(a), a flashlight causes an illumination change in the $m$th frame.

The linear mapping function $F_a$ of strategy (a) can adjust the local contrast, but cannot guarantee that the filtered window keeps the same average intensity. Although $F_b$ of strategy (b) addresses this problem, neither $F_a$ nor $F_b$ is robust to local scene changes. For example, in Fig. 3(b), the bright pixels (within the green circles) appear in the $(n-1)$th frame but disappear in the $n$th and $(n+1)$th frames. In this situation, $F_a$ and $F_b$ produce unsatisfactory filtering results: a few bright pixels stretch the intensity range, so the majority of pixel intensities are compressed into a low-contrast range.
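The failure mode described above can be reproduced on a toy window, using compact versions of contrast stretching and discrete histogram specification defined inline. In this construction (our own, for illustration only), the current window is a 4×4 ramp of 16 levels from 40 to 100, and the adjacent window has the same content plus a single bright pixel (255) that exists only in that frame.

```python
import numpy as np


def contrast_stretch(src, ref):
    """Strategy (a): linear map of [min, max] of src onto [min, max] of ref (src must not be flat)."""
    src, ref = np.asarray(src, float), np.asarray(ref, float)
    return (src - src.min()) * (ref.max() - ref.min()) / (src.max() - src.min()) + ref.min()


def histogram_specification(src, ref, levels=256):
    """Strategy (c): discrete z = G^{-1}(T(r)) for integer intensities."""
    bins = np.arange(levels + 1)
    T = np.cumsum(np.histogram(src, bins=bins)[0]) / np.size(src)
    G = np.cumsum(np.histogram(ref, bins=bins)[0]) / np.size(ref)
    lut = np.clip(np.searchsorted(G, T, side="left"), 0, levels - 1)
    return lut[np.asarray(src).astype(np.intp)]


# Current window S(i, t): a smooth ramp 40, 44, ..., 100.
ref = np.arange(40, 104, 4).reshape(4, 4)
# Adjacent window S(i, t+k): same content plus one bright pixel that only
# exists in this frame (a local scene change, as in Fig. 3(b)).
src = ref.copy()
src[3, 3] = 255
others = src != 255  # the 15 pixels that did not change

a = contrast_stretch(src, ref)[others]
c = histogram_specification(src, ref)[others]
spread_a = a.max() - a.min()  # about 15.6 gray levels: contrast is compressed
spread_c = c.max() - c.min()  # 56 gray levels: the original spread is kept
```

The single outlier stretches the source range to [40, 255], so contrast stretching squeezes the 15 unchanged pixels into about 15.6 gray levels, whereas histogram specification maps them back by rank and keeps their original spread of 56 levels.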

In both cases,  can produce plausible visual effects and fulfill the above requirements. Therefore, we select

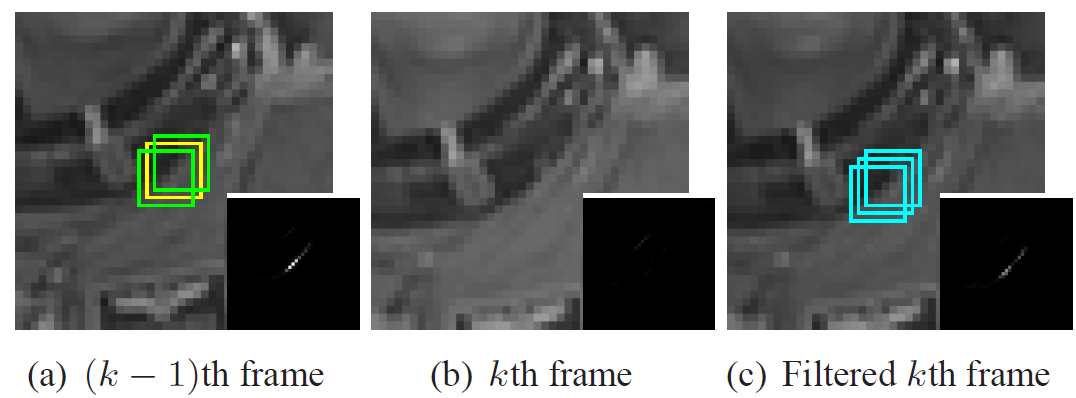

can produce plausible visual effects and fulfill the above requirements. Therefore, we select  as the core block of our proposed denoising system. A processing example is shown in Fig.4, comparing the non-local search results before and after the histogram processing on the kth frame with flashlight effect.

as the core block of our proposed denoising system. A processing example is shown in Fig.4, comparing the non-local search results before and after the histogram processing on the kth frame with flashlight effect.

Fig. 4. Comparison of non-local patch searching before and after filtering of the kth frame with flashlight.
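One possible way to plug histogram specification into the space-temporal NLM search is sketched below. This is our own illustrative reading, not the authors' code: each adjacent window (taken with a patch-sized margin) is histogram-specified to the current window before patch distances are computed. The weight $\exp(-d^2/h^2)$, the window sizes, and the name `hist_nlm_pixel` are assumptions.

```python
import numpy as np


def hist_spec(src, ref, levels=256):
    """Discrete histogram specification z = G^{-1}(T(r)) for integer-valued arrays."""
    bins = np.arange(levels + 1)
    T = np.cumsum(np.histogram(src, bins=bins)[0]) / src.size
    G = np.cumsum(np.histogram(ref, bins=bins)[0]) / ref.size
    lut = np.clip(np.searchsorted(G, T, side="left"), 0, levels - 1)
    return lut[src.astype(np.intp)].astype(np.float64)


def hist_nlm_pixel(frames, t, i, j, p=1, s=3, h=10.0, match=True):
    """Space-temporal NLM estimate of pixel (i, j) in frames[t].

    With match=True, every adjacent window is histogram-specified to the
    current window before patch comparison. Returns the estimate and the
    share of the total weight contributed by each frame. (i, j) must be at
    least p + s pixels from the border.
    """
    r = p + s  # window radius: search radius plus a patch margin
    cur = frames[t][i - r:i + r + 1, j - r:j + r + 1].astype(np.float64)
    ref = cur[s:s + 2 * p + 1, s:s + 2 * p + 1]  # patch centred on (i, j)
    num, totals = 0.0, []
    for k, frame in enumerate(frames):
        win = frame[i - r:i + r + 1, j - r:j + r + 1].astype(np.float64)
        if match and k != t:
            win = hist_spec(win, cur)
        total = 0.0
        for dy in range(2 * s + 1):
            for dx in range(2 * s + 1):
                cand = win[dy:dy + 2 * p + 1, dx:dx + 2 * p + 1]
                w = np.exp(-np.mean((ref - cand) ** 2) / h**2)
                num += w * win[dy + p, dx + p]
                total += w
        totals.append(total)
    totals = np.array(totals)
    return num / totals.sum(), totals / totals.sum()


rng = np.random.default_rng(2)
base = np.clip(100 + rng.normal(0, 5, (24, 24)), 0, 255).astype(np.uint8)
flash = (base.astype(np.int16) + 60).astype(np.uint8)  # brightened next frame
frames = [base, base, flash]

est_nlm, share_nlm = hist_nlm_pixel(frames, 0, 12, 12, match=False)
est_hist, share_hist = hist_nlm_pixel(frames, 0, 12, 12, match=True)
```

With plain matching the brightened frame receives almost no weight; after histogram specification its window is mapped back onto the current window's levels, so all three frames contribute equally, in the spirit of the before/after comparison in Fig. 4.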

Experimental results

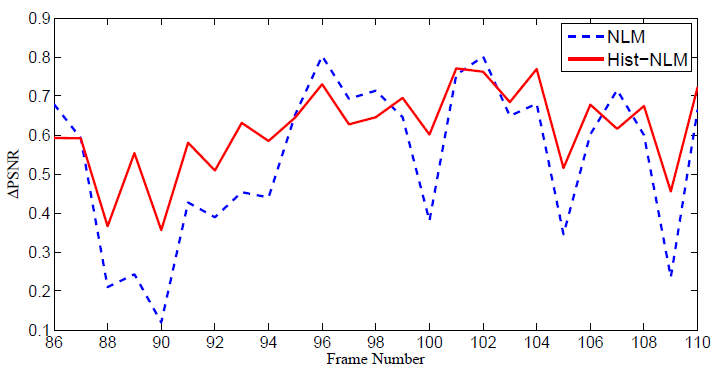

Fig. 5. Frame-by-frame PSNR gains of the proposed method (Hist-NLM) and the multi-frame NLM-based method over the single-frame NLM-based method on the Crew sequence (σe = 10).

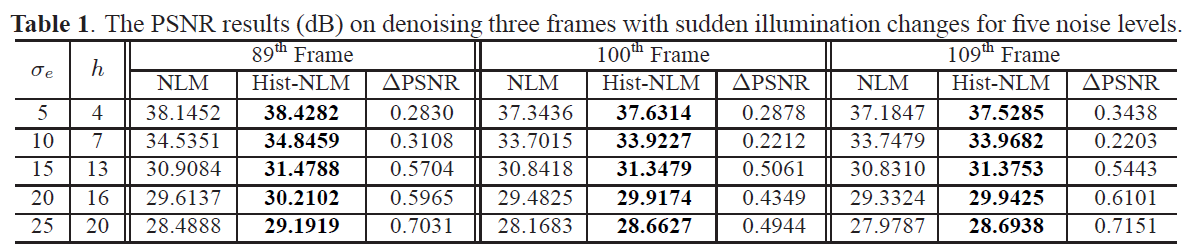

Synthetic noise

Panels: Original | Noisy (σe = 10) | Non-Local Means | Hist-NLM
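The synthetic-noise results are reported per noise level σe and measured in PSNR. A minimal sketch of both, assuming that σe is the standard deviation of additive white Gaussian noise and that PSNR uses a peak value of 255 for 8-bit frames, is given here; `add_synthetic_noise` and `psnr` are hypothetical helper names.

```python
import numpy as np


def add_synthetic_noise(frame, sigma_e, seed=0):
    """Add white Gaussian noise with standard deviation sigma_e (assumed noise model, no clipping)."""
    rng = np.random.default_rng(seed)
    return frame.astype(np.float64) + rng.normal(0.0, sigma_e, frame.shape)


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    diff = np.asarray(reference, np.float64) - np.asarray(estimate, np.float64)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak**2 / mse)


clean = np.full((64, 64), 128, dtype=np.uint8)
noisy = add_synthetic_noise(clean, sigma_e=10)
noisy_psnr = psnr(clean, noisy)  # should be close to 20*log10(255/10), about 28.13 dB
```

Under this model the PSNR of the noisy input is roughly $20\log_{10}(255/\sigma_e)$, which gives a baseline against which the denoised PSNR values can be read.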

For visual quality comparisons under different noise levels (σe = 5, 10, 15, 20, 25), three test frames are shown together with their adjacent frames: the 89th frame (no flashlight; adjacent frames 88th and 90th), the 100th frame (with flashlight; adjacent frames 99th and 101st), and the 109th frame (with flashlight; adjacent frames 108th and 110th). For each test frame, the original frame, the noisy frame, and the NLM and Hist-NLM denoised results are compared. The PSNR values of the denoised frames are:

| σe | Frame | Flashlight | NLM denoised PSNR (dB) | Hist-NLM denoised PSNR (dB) |
|---|---|---|---|---|
| 5 | 89th | No | 38.1452 | 38.4282 |
| 5 | 100th | Yes | 37.3436 | 37.6314 |
| 5 | 109th | Yes | 37.1847 | 37.5285 |
| 10 | 89th | No | 34.5351 | 34.8459 |
| 10 | 100th | Yes | 33.7015 | 33.9227 |
| 10 | 109th | Yes | 33.7479 | 33.9682 |
| 15 | 89th | No | 30.9084 | 31.4788 |
| 15 | 100th | Yes | 30.8418 | 31.3479 |
| 15 | 109th | Yes | 30.831 | 31.3753 |
| 20 | 89th | No | 29.6137 | 30.2102 |
| 20 | 100th | Yes | 29.4825 | 29.9174 |
| 20 | 109th | Yes | 29.3324 | 29.9425 |
| 25 | 89th | No | 28.4888 | 29.1919 |
| 25 | 100th | Yes | 28.1683 | 28.6627 |
| 25 | 109th | Yes | 27.9787 | 28.6938 |
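As a quick arithmetic check on the PSNR values reported for the three test frames, the sketch below computes the gain of Hist-NLM over NLM for every noise level and frame. It only reuses the reported numbers; the variable names are illustrative.

```python
# (NLM, Hist-NLM) PSNR in dB for each noise level sigma_e and test frame, as reported above.
reported = {
    5:  {89: (38.1452, 38.4282), 100: (37.3436, 37.6314), 109: (37.1847, 37.5285)},
    10: {89: (34.5351, 34.8459), 100: (33.7015, 33.9227), 109: (33.7479, 33.9682)},
    15: {89: (30.9084, 31.4788), 100: (30.8418, 31.3479), 109: (30.831, 31.3753)},
    20: {89: (29.6137, 30.2102), 100: (29.4825, 29.9174), 109: (29.3324, 29.9425)},
    25: {89: (28.4888, 29.1919), 100: (28.1683, 28.6627), 109: (27.9787, 28.6938)},
}

# Gain of Hist-NLM over NLM for every (noise level, frame) pair, rounded to 4 decimals.
gains = {
    sigma: {frame: round(hist - nlm, 4) for frame, (nlm, hist) in per_frame.items()}
    for sigma, per_frame in reported.items()
}
mean_gain = {sigma: sum(g.values()) / len(g) for sigma, g in gains.items()}

for sigma, g in gains.items():
    print(f"sigma_e={sigma:>2}: gains {g}, mean {mean_gain[sigma]:.4f} dB")
```

In every reported case the gain is positive, and every gain at σe ≥ 15 (0.43 to 0.72 dB) exceeds every gain at σe ≤ 10 (0.22 to 0.34 dB).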

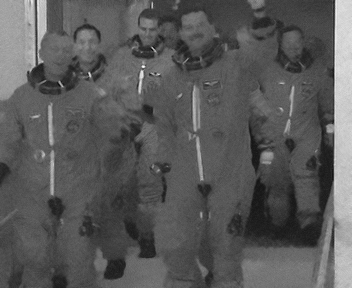

Real noisy video "Indoor", captured by a Canon PowerShot-A570 under low-light conditions with a flashlight effect.

Panels: Real noisy frame | NLM denoised | Hist-NLM denoised

References

[1] A. Buades, B. Coll, and J.-M. Morel, “Denoising image sequences does not require motion estimation,” in Proc. IEEE Conference on Advanced Video and Signal Based Surveillance (AVSS 2005), Sept. 2005, pp. 70–74.