- Mengyan Wang (wangmengyan95@gmail.com)
- Jiaying Liu (liujiaying@pku.edu.cn)
- Wei Bai (janelle@pku.edu.cn)
- Zongming Guo (guozongming@pku.edu.cn)

Published in ISCAS, May 2013.

Introduction

Super resolution is a long-standing research area in the image processing community. Multi-frame super resolution is a traditional approach that aims to recover a high-resolution (HR) image from a series of low-resolution (LR) images. By exploiting redundant information across LR frames, we can reconstruct a high-quality HR image with less aliasing and fewer artifacts. The key to conventional multi-frame super resolution algorithms is knowing the sub-pixel displacements between frames. Because precise motion estimation is very difficult to obtain, these algorithms are severely restricted. To solve this problem, Protter et al. [1] proposed the non-local means (NLM) super resolution algorithm, which needs no explicit motion estimation. By replacing every pixel with a weighted average of its neighborhood, NLM SR can produce a better HR image.

Building on NLM SR, various improvements have been proposed to make similar patches stand out and to give them proper weights. Many of these methods use intensity information, i.e., the value of a pixel, to measure the similarity of patches, and they assume that the illumination condition is stable across images. However, natural images are easily affected by illumination. When illumination changes between adjacent frames, those methods perform poorly because similar patches are lost or patch weights become inaccurate.

We therefore propose an illumination-invariance measurement to calculate the similarity between patches. Instead of only adjusting contrast between frames, we also select proper candidate patches, which helps us find more similar patches. Because local structure information is relatively stable under complex illumination conditions, we also take the structure information of a patch into account. We simplify Speeded Up Robust Features (SURF) [2] to obtain a local structure descriptor. By combining structure information with the modified intensity information and giving them suitable weights, we can measure patch similarity more accurately, even under complex illumination conditions.

Implementation

Intensity Similarity Measurement

NLM SR uses the SSD (sum of squared differences) of two patches to calculate intensity similarity. Because the contrast of an image is likely to be influenced by illumination, this measure is not valid when the illumination condition changes between adjacent frames. To reduce the influence of illumination, the contrast of adjacent frames around illumination changes must be adjusted so that they have similar visual appearances. In our work, we first use histogram equalization to adjust the contrast. We then discuss the scale at which to perform the adjustment and how to measure the difference between a candidate patch and a reference patch.
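To make the limitation concrete, the following minimal sketch computes an SSD-based patch weight and shows how a pure brightness offset collapses it. The Gaussian kernel form `exp(-SSD / h^2)` is a common NLM choice assumed here, not a formula taken from this page, and the names `ssd`, `nlm_weight`, and the smoothing parameter `h` are hypothetical.

```python
import math

def ssd(patch_a, patch_b):
    """Sum of squared differences between two equally sized patches (lists of rows)."""
    return sum((a - b) ** 2
               for row_a, row_b in zip(patch_a, patch_b)
               for a, b in zip(row_a, row_b))

def nlm_weight(ref_patch, cand_patch, h=10.0):
    """Assumed Gaussian-kernel weight; h is a hypothetical smoothing parameter."""
    return math.exp(-ssd(ref_patch, cand_patch) / (h * h))

ref = [[10, 20], [30, 40]]
same = [[10, 20], [30, 40]]
brighter = [[30, 40], [50, 60]]  # same structure, uniform +20 brightness offset

w_same = nlm_weight(ref, same)          # identical patch: SSD is 0
w_brighter = nlm_weight(ref, brighter)  # SSD is 4 * 20^2 = 1600
```

Although `brighter` has exactly the same structure as `ref`, its weight is close to zero, so a purely intensity-based measure would effectively discard it.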

Because a patch contains too few pixels, contrast adjustment at the patch scale is sensitive to noise and prone to inaccuracy. We therefore adjust contrast at the search-window scale, which contains enough pixels and still adapts to local illumination variation. Because the foreground and background of an image reflect light differently under the same illumination condition, contrast adjustment may even introduce serious errors when a search window contains both foreground and background objects. To address this problem, instead of simply using the adjusted patch as the candidate patch for measuring intensity similarity, we perform histogram equalization in the corresponding search window.
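The search-window contrast adjustment can be sketched as follows. This is a minimal illustration of plain histogram equalization over one window, assuming 8-bit integer intensities stored as nested lists; the function name `equalize_window` is hypothetical, and the foreground/background handling discussed above is not modeled.

```python
def equalize_window(window, levels=256):
    """Histogram-equalize a 2-D list of integer intensities in [0, levels)."""
    flat = [p for row in window for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution of intensities inside the window.
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)

    cdf_min = next(c for c in cdf if c > 0)
    n = len(flat)
    if n == cdf_min:  # constant window: nothing to stretch
        return [row[:] for row in window]

    scale = (levels - 1) / (n - cdf_min)
    return [[round((cdf[p] - cdf_min) * scale) for p in row] for row in window]

# Two windows showing the same content under different illumination
# (the second has a different gain and offset, but the same intensity ordering).
dark = [[10, 20], [30, 40]]
bright = [[110, 140], [170, 200]]

eq_dark = equalize_window(dark)
eq_bright = equalize_window(bright)
```

Because equalization depends only on the rank order of intensities within the window, both windows map to the same values, which is why an SSD computed after the adjustment is far less sensitive to the illumination change.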

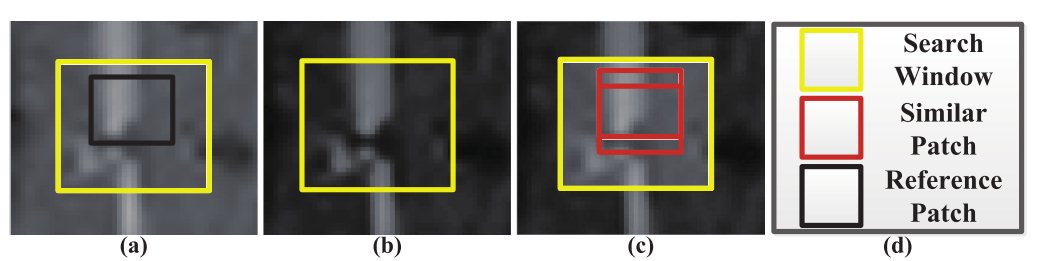

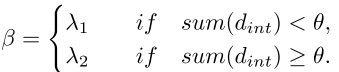

Fig.1 (a) A reference patch in k-th frame. (b) Similar patches in (k−1)-th frame. (c) Similar patches in (k−1)-th frame by using our intensity measurement.

Fig. 1 shows that when the illumination condition changes in the k-th frame, few similar candidate patches can be found in the (k−1)-th frame. After adjusting contrast at the search-window scale, we can find more patches of similar intensity between adjacent frames. Finally, when the adjusted patch is chosen as the candidate patch for measuring intensity similarity, we perform the adjustment on the low-resolution image and pick the reference point in the NLM-based framework from the adjusted low-resolution patch.

Structure Similarity Measurement

Under complex illumination conditions, the intensity, texture, and many other attributes of adjacent frames may vary significantly, but the structure in local areas rarely changes. In our work, we extract local structure to obtain a structure descriptor. We then calculate the structure similarity between patches using this descriptor.

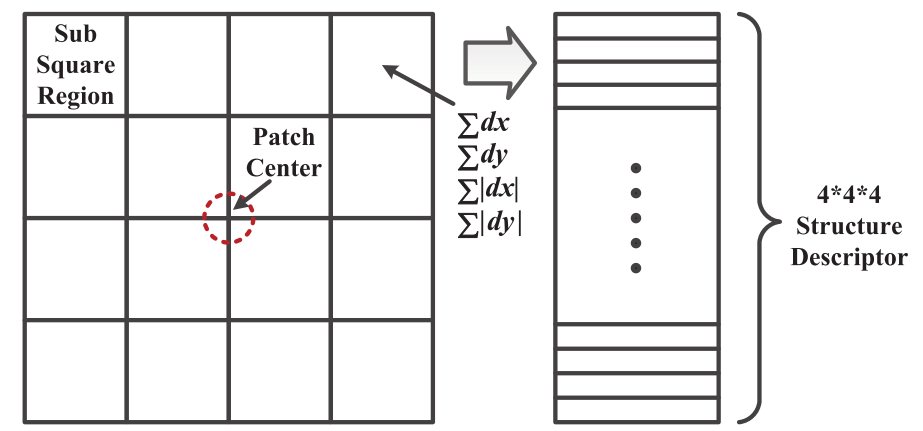

A simplified version of SURF [2] is used to describe local structure. Fig. 2 shows how the structure descriptor of a patch is obtained.

Fig.2 The way to get the structure descriptor of a patch. Red circle is the center of a patch. Squares represent the sub-square-regions. The structure descriptor is the column vector on the right.

The Haar wavelet in different directions can represent important spatial information, so the descriptor contains structure information of a patch. By turning the original descriptor into a unit vector, we can make this descriptor invariant to illumination changes.
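The effect of normalizing the descriptor can be illustrated with a small sketch. The exact descriptor layout is given in Fig. 2 and is not reproduced here; the version below is an assumption modeled on the usual SURF scheme: first differences stand in for Haar wavelet responses, the patch is split into a 2×2 grid of sub-regions, and each sub-region contributes the sums of dx, dy, |dx|, and |dy|. All function names are hypothetical.

```python
import math

def haar_responses(patch):
    """Horizontal and vertical first differences, a simple stand-in for Haar responses."""
    h, w = len(patch), len(patch[0])
    dx = [[patch[y][x + 1] - patch[y][x] for x in range(w - 1)] for y in range(h - 1)]
    dy = [[patch[y + 1][x] - patch[y][x] for x in range(w - 1)] for y in range(h - 1)]
    return dx, dy

def structure_descriptor(patch, grid=2):
    """Unit-length descriptor built from per-sub-region sums of dx, dy, |dx|, |dy|."""
    dx, dy = haar_responses(patch)
    step = len(dx) // grid  # assumes a square patch whose response size divides by grid
    desc = []
    for gy in range(grid):
        for gx in range(grid):
            sx = sy = ax = ay = 0.0
            for y in range(gy * step, (gy + 1) * step):
                for x in range(gx * step, (gx + 1) * step):
                    sx += dx[y][x]
                    sy += dy[y][x]
                    ax += abs(dx[y][x])
                    ay += abs(dy[y][x])
            desc += [sx, sy, ax, ay]
    norm = math.sqrt(sum(v * v for v in desc))
    return [v / norm for v in desc] if norm > 0 else desc

patch = [
    [10, 12, 15, 40, 42],
    [11, 13, 18, 41, 44],
    [10, 14, 30, 43, 45],
    [12, 20, 38, 44, 47],
    [13, 35, 39, 46, 48],
]
# Same patch under a different illumination: gain of 2 and offset of 30.
relit = [[2 * p + 30 for p in row] for row in patch]

d_patch = structure_descriptor(patch)
d_relit = structure_descriptor(relit)
max_diff = max(abs(a - b) for a, b in zip(d_patch, d_relit))
```

Taking differences removes the brightness offset, and dividing by the vector norm removes the gain, so `d_patch` and `d_relit` agree up to floating-point error.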

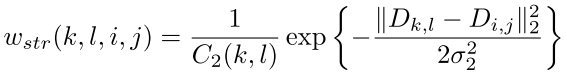

Once we have the structure descriptor of a patch, the structure similarity of two patches is defined as follows:

Illumination-Invariance Similarity Measurement

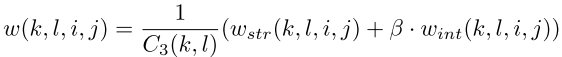

We combine the intensity term and the structure term to obtain an illumination-invariance measurement of the similarity between a candidate patch and a reference patch. The illumination-invariance measurement is defined as follows:

As discussed in the Intensity Similarity Measurement section, contrast adjustment may be invalid at boundaries between foreground and background. We therefore increase the weight of the structure term in boundary areas to limit the effect of invalid contrast adjustment there. We decide the parameter as follows:

Fig.3 (a) A reference patch in k-th frame. (b) (c) Similar patches in (k−1)-th frame by using our intensity measurement. (d) Similar patches by using illumination-invariance measurement.

Fig. 3 shows that by making the intensity information more accurate and adding structure information, our illumination-invariance measurement gives more reasonable results. The reference patch has a slight slope at the end of the zip, as Fig. 3(a) shows. If we use only the intensity measurement, a candidate patch without the slope at the end of the zip is still considered similar (Fig. 3(b)). With the illumination-invariance measurement, this error is eliminated, as Fig. 3(d) shows.
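The way a structure term can overturn an intensity-only ranking, as in the zip example, can be sketched with a purely hypothetical combination rule. The actual measurement and its boundary-dependent parameter are defined by the formulas of this section, which are not reproduced here; the convex combination, the parameter name `lam`, the kernel width `h`, and the distance values below are all assumptions chosen for illustration.

```python
import math

def combined_weight(d_intensity, d_structure, lam, h=1.0):
    """Hypothetical weight: convex mix of intensity and structure distances.

    lam in [0, 1] is the assumed weight of the structure term; a larger lam
    would be used in boundary areas where contrast adjustment is unreliable.
    """
    d = (1.0 - lam) * d_intensity + lam * d_structure
    return math.exp(-d / (h * h))

# Candidate A: intensities match well but the local structure differs.
# Candidate B: intensities match moderately and the structure matches well.
cand_a = {"d_intensity": 0.1, "d_structure": 1.0}
cand_b = {"d_intensity": 0.3, "d_structure": 0.1}

# Intensity only (lam = 0): A receives the larger weight.
a_int_only = combined_weight(**cand_a, lam=0.0)
b_int_only = combined_weight(**cand_b, lam=0.0)

# Structure emphasized (lam = 0.8): B receives the larger weight.
a_mixed = combined_weight(**cand_a, lam=0.8)
b_mixed = combined_weight(**cand_b, lam=0.8)
```

With the structure term switched off, the structurally wrong candidate A wins; once the structure term carries most of the weight, candidate B is preferred.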

Experimental Results

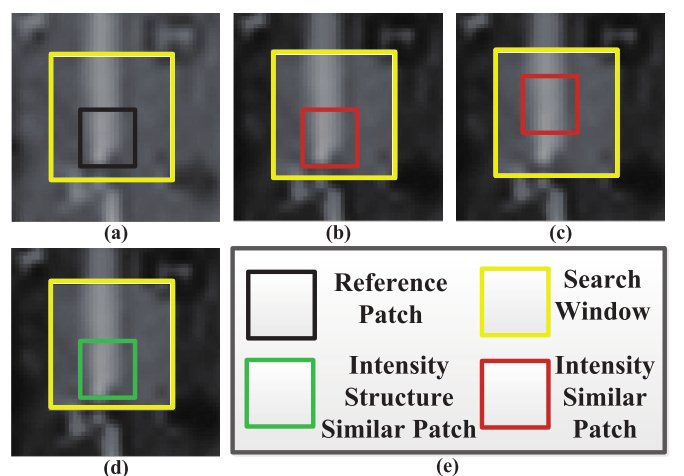

We test our method on the Crew sequence, which contains local illumination changes caused by camera flashes. First, to demonstrate the effectiveness of our intensity similarity measurement, we combine it with NLM SR to obtain ISNLM and compare ISNLM with the original NLM. Then, by comparing ISNLM with our proposed illumination-invariance NLM-based method (IINLM), we illustrate the validity of the illumination-invariance similarity measurement.

For the 89th, 90th, 100th, 105th, and 109th frames, the SR results (in dB) of the original NLM, ISNLM, and IINLM are given in the following table. For these frames under complex illumination conditions, ISNLM improves on the original NLM by roughly 0.1 to 0.3 dB, and IINLM improves on it by roughly 0.3 to 0.4 dB. In Fig. 4, we compare the visual quality of the three methods for the 100th frame. Because we modify local contrast, ISNLM eliminates block artifacts that appear with the original NLM. Because it also considers structure information, IINLM preserves more details in marginal regions than ISNLM, as can be seen in the zoomed images.

| Frame | NLM [1] | ISNLM | IINLM |
| --- | --- | --- | --- |
| 89th | 29.02 | 29.13 | 29.31 |
| 90th | 28.17 | 28.38 | 28.52 |
| 100th | 27.97 | 28.17 | 28.36 |
| 105th | 27.55 | 27.83 | 27.93 |
| 109th | 28.24 | 28.47 | 28.63 |
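The per-frame gains over the original NLM can be checked directly from the table values. The short sketch below only does that arithmetic; the dictionary name `results_db` and the tuple order (NLM, ISNLM, IINLM) are choices made for this illustration.

```python
# Table values in dB, ordered as (NLM, ISNLM, IINLM).
results_db = {
    "89th": (29.02, 29.13, 29.31),
    "90th": (28.17, 28.38, 28.52),
    "100th": (27.97, 28.17, 28.36),
    "105th": (27.55, 27.83, 27.93),
    "109th": (28.24, 28.47, 28.63),
}

# Gain of ISNLM and IINLM over the original NLM for each frame.
gains = {
    frame: (round(isnlm - nlm, 2), round(iinlm - nlm, 2))
    for frame, (nlm, isnlm, iinlm) in results_db.items()
}

mean_isnlm_gain = sum(g[0] for g in gains.values()) / len(gains)
mean_iinlm_gain = sum(g[1] for g in gains.values()) / len(gains)

for frame, (g_is, g_ii) in gains.items():
    print(f"{frame}: ISNLM +{g_is:.2f} dB, IINLM +{g_ii:.2f} dB")
```

Averaged over the five frames, the gains come to about 0.21 dB for ISNLM and 0.36 dB for IINLM.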

Fig. 4 Partial results of three methods in Crew. (a) 100th frame. (b)(e) Original NLM, (c)(f) ISNLM, (d) (g) IINLM.

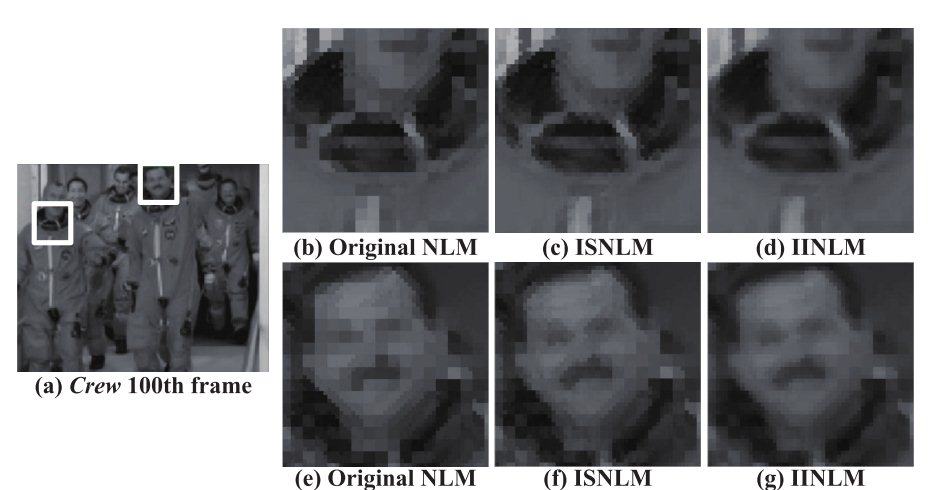

Lastly, we run our method on a real video sequence, Board, captured with a Canon PowerShot A570. We change the location and intensity of the light source to simulate a complex illumination condition. The 513th frame and zoomed details are shown in Fig. 5. From all the experiments we have conducted, we conclude that illumination-invariant NLM SR is more reliable when processing sequences under complex illumination conditions and performs better than its baseline, NLM SR, because it obtains more potentially useful information from the candidate frames.

Fig. 5 Partial results of three methods in Board. (a) 513th frame. (b) Original NLM. (c) ISNLM. (d) IINLM.

References

[1] M. Protter, M. Elad, H. Takeda, and P. Milanfar. Generalizing the Nonlocal-Means to Super-Resolution Reconstruction, IEEE Transactions on Image Processing, vol. 18, no. 1, pp. 36-51, January 2009.

[2] D. G. Lowe. Distinctive Image Features from Scale-Invariant Keypoints, International Journal of Computer Vision, vol. 60, no. 2, pp. 91-110, 2004.