Erase or Fill? Deep Joint Recurrent Rain Removal and Reconstruction in Videos

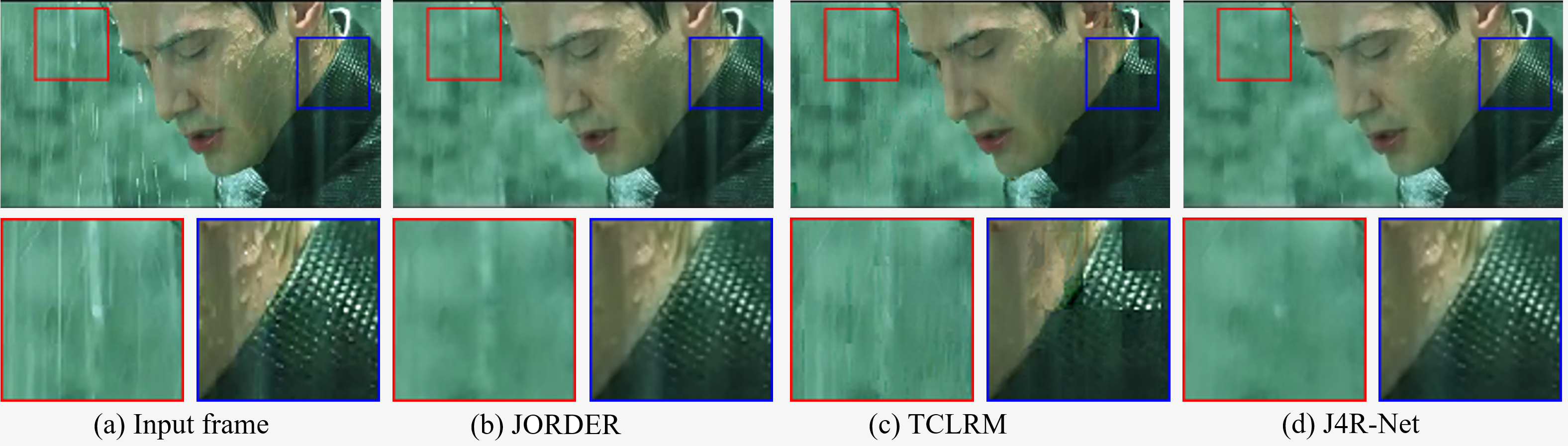

Figure 1. Visual results of different methods on a real-world rain video. Compared with JORDER [3] and TCLRM [1], our method removes most rain streaks and significantly enhances visibility.

Abstract

In this paper, we address the problem of video rain removal by constructing deep recurrent convolutional networks. We revisit rain removal by considering rain occlusion regions, i.e., regions where the light transmittance of rain streaks is low. Unlike regions degraded by additive rain streaks, rain occlusion regions lose the details of the background image completely. Therefore, we propose a hybrid rain model to depict both rain streaks and occlusions. Exploiting the rich temporal redundancy of videos, we build a Joint Recurrent Rain Removal and Reconstruction Network (J4R-Net) that seamlessly integrates rain degradation classification, rain removal based on spatial texture appearance, and background detail reconstruction based on temporal coherence. The rain degradation classification provides a binary map that reveals whether each location is degraded by linear additive streaks or by occlusions. With this side information, the gate of the recurrent unit learns to trade off rain streak removal against background detail reconstruction. Extensive experiments on a series of synthetic and real videos with rain streaks verify the superiority of the proposed method over previous state-of-the-art methods.
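
To make the difference between erasing and filling concrete, the following Python sketch applies a hybrid rain model pixel by pixel. It assumes a form in which a binary map \(\boldsymbol{\alpha}\) switches between the additive model \(\mathbf{B} + \mathbf{S}\) and an occluding rain layer \(\mathbf{A}\), i.e. \(\mathbf{O} = (1 - \boldsymbol{\alpha}) \odot (\mathbf{B} + \mathbf{S}) + \boldsymbol{\alpha} \odot \mathbf{A}\). The symbol \(\mathbf{A}\), the function name `synthesize_hybrid_rain`, and the toy pixel values are illustrative assumptions, not details given on this page.

```python
from typing import List


def synthesize_hybrid_rain(
    background: List[float],
    streaks: List[float],
    alpha: List[float],
    rain_layer: List[float],
) -> List[float]:
    """Apply an ASSUMED hybrid rain model pixel by pixel:

        O = (1 - alpha) * (B + S) + alpha * A

    alpha = 0: additive streak, so the background B still contributes to O.
    alpha = 1: occlusion, so O depends only on the rain layer A and B is lost.
    """
    n = len(background)
    if not (len(streaks) == len(alpha) == len(rain_layer) == n):
        raise ValueError("all inputs must describe the same pixels")
    return [
        (1.0 - a) * (b + s) + a * r
        for b, s, a, r in zip(background, streaks, alpha, rain_layer)
    ]


# Usage: three pixels; the last one is occluded by rain.
observed = synthesize_hybrid_rain(
    background=[0.2, 0.5, 0.8],
    streaks=[0.1, 0.0, 0.1],
    alpha=[0.0, 0.0, 1.0],
    rain_layer=[0.9, 0.9, 0.9],
)
print(observed)  # the last value equals the rain layer, whatever the background was
```

Where \(\boldsymbol{\alpha} = 1\), the observed value no longer depends on \(\mathbf{B}\), so no operation on the current frame alone can recover it; under this model, occluded regions have to be filled from other frames rather than erased.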

Framework

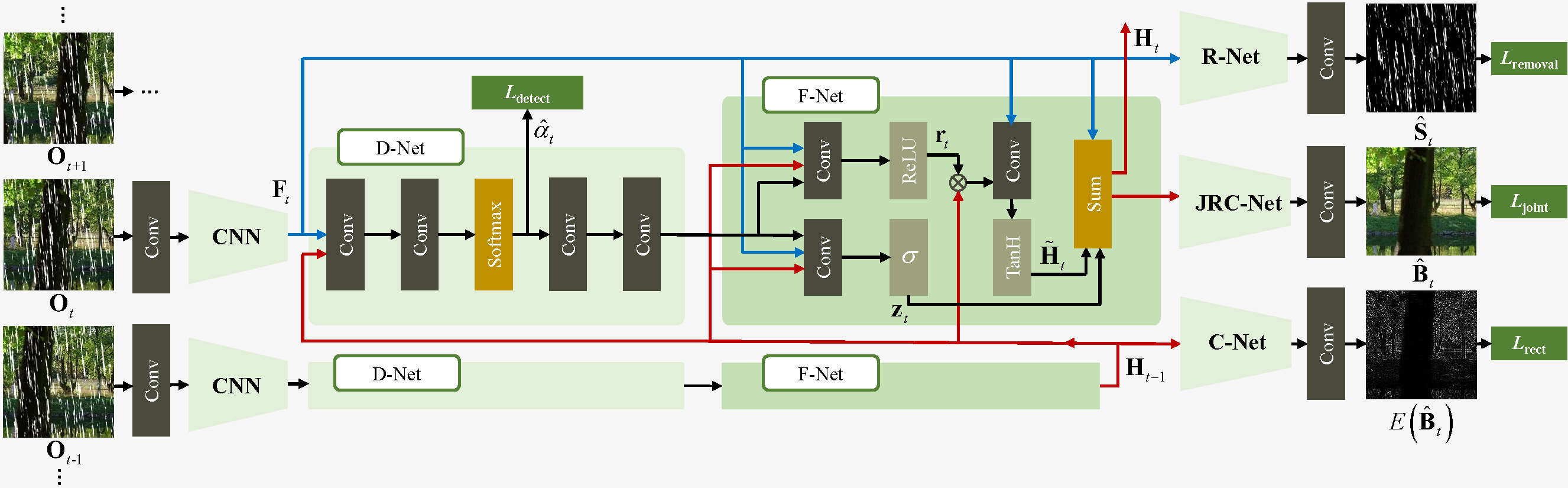

Figure 2. The framework of the Joint Recurrent Rain Removal and Reconstruction Network (J4R-Net). We first employ a CNN to extract the features \(\mathbf{F}_{t}\) of the \(t\)-th frame \(\mathbf{O}_{t}\). The Degradation Classification Network (D-Net) then predicts the degradation classification map \(\hat{\boldsymbol{\alpha}}_{t}\) from \(\mathbf{F}_{t}\) and the aggregated feature \(\mathbf{H}_{t-1}\) of the previous frames. Next, the Fusion Network (F-Net), a gated recurrent neural network, generates the new aggregated feature \(\mathbf{H}_{t}\) from \(\mathbf{F}_{t}\), \(\mathbf{H}_{t-1}\), and \(\hat{\boldsymbol{\alpha}}_{t}\). \(\mathbf{F}_{t}\) is fed into the Removal Network (R-Net) to estimate the rain streaks \(\hat{\mathbf{S}}_{t}\); this path trains \(\mathbf{F}_{t}\) to separate rain streaks based on spatial appearance. The aggregated feature \(\mathbf{H}_{t-1}\) of the previous frames is fed into the reConstruction Network (C-Net) to predict the details of the current frame \(E(\hat{\mathbf{B}}_{t})\), where \(E(\cdot)\) is a high-pass filter; this path makes \(\mathbf{H}_{t-1}\) capable of filling in structural details in the rain occlusion regions of the current frame. The new aggregated feature \(\mathbf{H}_{t}\) combines the information of both paths and passes through the Joint Removal and reConstruction Network (JRC-Net) to estimate the background image \(\hat{\mathbf{B}}_{t}\), which is the final output of J4R-Net.
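
The caption states that F-Net's gate uses the degradation map to balance the spatial (erase) path against the temporal (fill) path. The Python sketch below illustrates only that idea with a toy per-pixel convex combination; it is not the F-Net update. The gate parameters `w_alpha` and `bias` are hypothetical fixed values standing in for weights that a real network would learn, and a real gate would also depend on \(\mathbf{F}_{t}\) and \(\mathbf{H}_{t-1}\).

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(
    spatial: List[float],
    temporal: List[float],
    alpha_hat: List[float],
    w_alpha: float = 8.0,
    bias: float = -4.0,
) -> List[float]:
    """Toy stand-in for a gated recurrent update (NOT the F-Net equations).

    spatial:   current-frame features, playing the role of F_t ("erase" path)
    temporal:  aggregated past features, playing the role of H_{t-1} ("fill" path)
    alpha_hat: predicted degradation map in [0, 1]; 1 means occlusion
    w_alpha, bias: hypothetical fixed gate parameters (learned in a real network)
    """
    if not (len(spatial) == len(temporal) == len(alpha_hat)):
        raise ValueError("inputs must have the same length")
    fused = []
    for f, h, a in zip(spatial, temporal, alpha_hat):
        z = sigmoid(w_alpha * a + bias)  # z approaches 1 where the pixel is occluded
        fused.append(z * h + (1.0 - z) * f)
    return fused


# Usage: pixel 0 carries an additive streak, pixel 1 is occluded.
print(gated_fusion(spatial=[1.0, 1.0], temporal=[0.0, 0.0], alpha_hat=[0.0, 1.0]))
# prints values close to [0.982, 0.018]
```

A soft gate keeps the update differentiable, so a network can blend the two paths at uncertain pixels instead of making a hard erase-or-fill decision.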

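The C-Net target \(E(\hat{\mathbf{B}}_{t})\) in Figure 2 is a high-pass component of the background. This page does not specify \(E(\cdot)\); the 1-D Python sketch below assumes the simplest choice, the residual of a box blur, \(E(x) = x - \mathrm{blur}(x)\). The function names are illustrative, and a real implementation would apply a 2-D filter to image tensors.

```python
from typing import List


def box_blur_1d(signal: List[float], radius: int = 1) -> List[float]:
    """Low-pass filter: mean over a (2 * radius + 1)-sample window, edges replicated."""
    n = len(signal)
    blurred = []
    for i in range(n):
        # Clamp indices so samples past either end repeat the edge value.
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def high_pass(signal: List[float], radius: int = 1) -> List[float]:
    """ASSUMED form of E(x) = x - lowpass(x): keeps edges and texture, drops smooth content."""
    return [x - b for x, b in zip(signal, box_blur_1d(signal, radius))]


# Usage: a flat region has no detail; a step edge produces a +/- response.
print(high_pass([5.0, 5.0, 5.0, 5.0]))  # [0.0, 0.0, 0.0, 0.0]
print(high_pass([0.0, 0.0, 3.0, 3.0]))  # [0.0, -1.0, 1.0, 0.0]
```

One reading of this design is that a detail-only target asks the temporal path to supply structure in occluded regions, while smooth, low-frequency content can still be estimated from the current frame.
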
Results

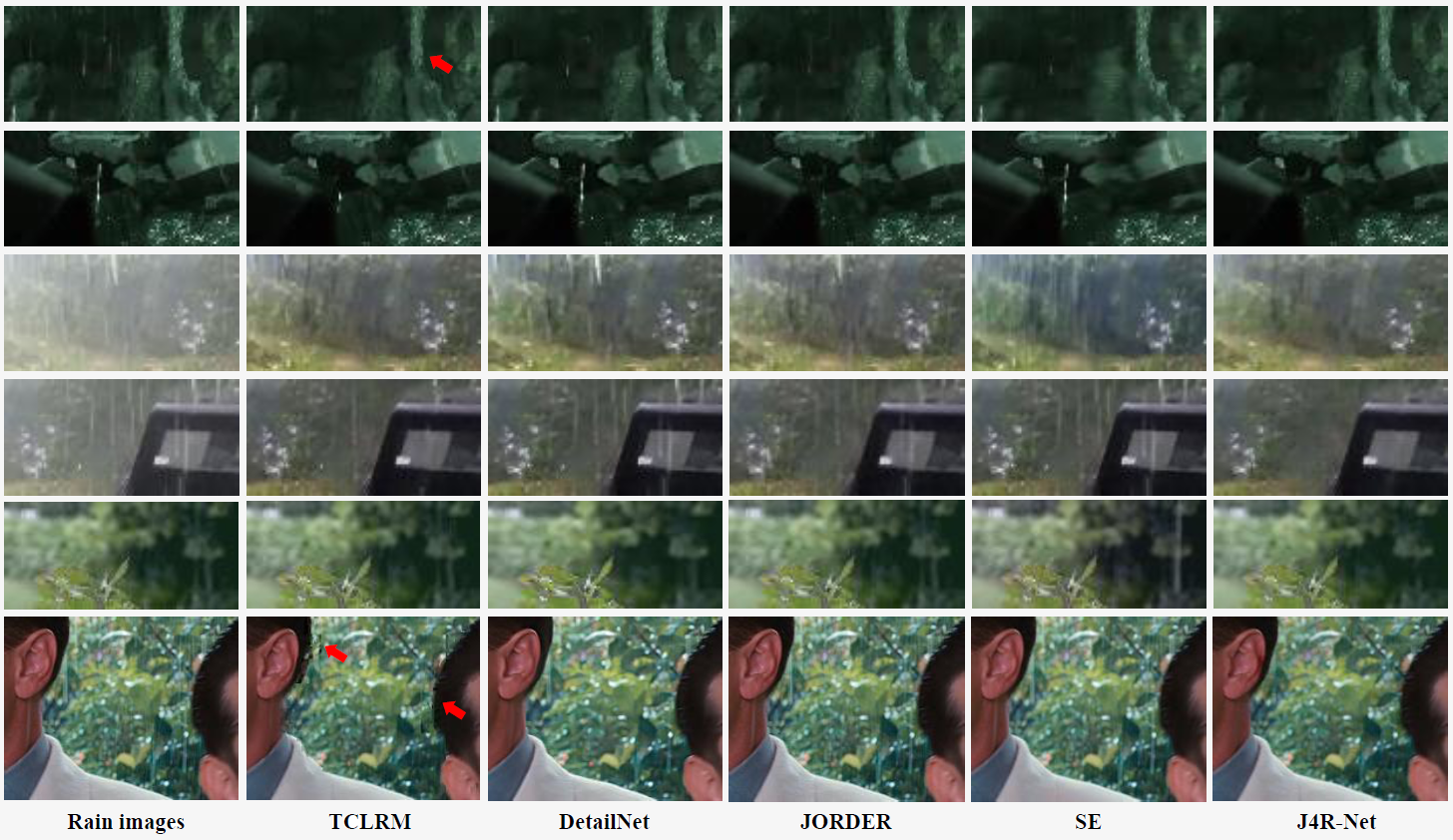

Figure 3. Results of different methods on real-world rain images.


Citation

@inproceedings{Jia2018RainRemoval,
  title={Erase or Fill? Deep Joint Recurrent Rain Removal and Reconstruction in Videos},
  author={Liu, Jiaying and Yang, Wenhan and Yang, Shuai and Guo, Zongming},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition},
  year={2018}
}

References

[1] J. H. Kim, J. Y. Sim, and C. S. Kim. Video deraining and desnowing using temporal correlation and low-rank matrix completion. IEEE Trans. on Image Processing, 24(9):2658–2670, Sept 2015.

[2] X. Fu, J. Huang, D. Zeng, Y. Huang, X. Ding, and J. Paisley. Removing rain from single images via a deep detail network. In Proc. IEEE Conf. Computer Vision and Pattern Recognition, July 2017.

[3] W. Yang, R. T. Tan, J. Feng, J. Liu, Z. Guo, and S. Yan. Deep joint rain detection and removal from a single image. In Proc. IEEE Conf. Computer Vision and Pattern Recognition, July 2017.

[4] W. Wei, L. Yi, Q. Xie, Q. Zhao, D. Meng, and Z. Xu. Should we encode rain streaks in video as deterministic or stochastic? In Proc. IEEE Int’l Conf. Computer Vision, Oct 2017.

[5] Y. Li, R. T. Tan, X. Guo, J. Lu, and M. S. Brown. Rain streak removal using layer priors. In Proc. IEEE Conf. Computer Vision and Pattern Recognition, pages 2736–2744, 2016.

[6] Y. Luo, Y. Xu, and H. Ji. Removing rain from a single image via discriminative sparse coding. In Proc. IEEE Int’l Conf. Computer Vision, pages 3397–3405, 2015.