Introduction

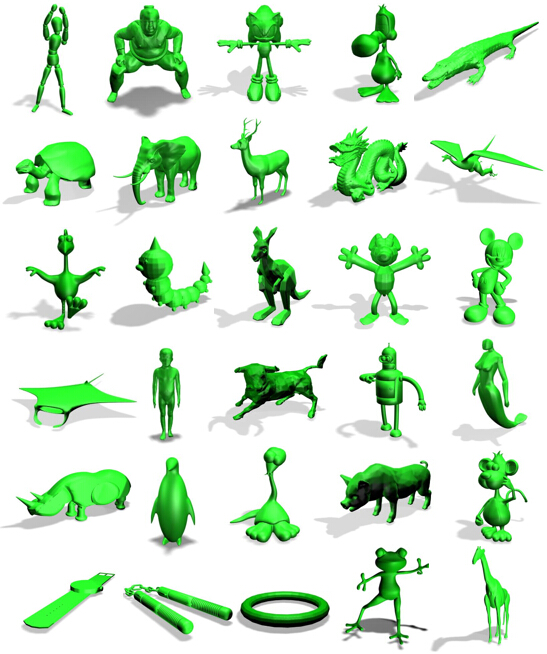

As shown in the figure, non-rigid 3D objects are common in our surroundings, so comparing non-rigid 3D shapes efficiently and accurately is of great practical significance. However, the non-rigid 3D model database of the SHREC’11 track contains too few categories of non-rigid shapes, and those shapes are not diverse enough in either texture or topological structure. Many state-of-the-art non-rigid shape retrieval methods achieve extremely high accuracy on this benchmark, which makes it difficult to distinguish between good methods or to demonstrate the improvement offered by newly proposed approaches. We therefore organize this track to promote the development of non-rigid 3D shape retrieval by expanding the original SHREC’11 non-rigid 3D shape database in three directions: quantity, category and complexity.

The objective of this track is to evaluate the performance of 3D shape retrieval approaches on a large-scale database of non-rigid 3D watertight meshes generated by our group.

Task description

The task is to evaluate the dissimilarity between every pair of objects in the database and then output the resulting dissimilarity matrix.
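As a rough illustration of this task, the sketch below builds a full pairwise dissimilarity matrix from per-model feature vectors. It is only a minimal example under stated assumptions: the descriptors, the Euclidean distance, the function names `dissimilarity_matrix` and `format_matrix`, and the plain-text layout (one row per line, space-separated values) are all hypothetical and are not the track's prescribed method or file format.

```python
import math


def dissimilarity_matrix(descriptors):
    """Return the N x N matrix of Euclidean distances between descriptors.

    `descriptors` is a list of equal-length numeric tuples, one per model
    (a hypothetical shape descriptor; any method could be substituted).
    """
    n = len(descriptors)
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = math.dist(descriptors[i], descriptors[j])
            matrix[i][j] = d  # the matrix is symmetric,
            matrix[j][i] = d  # so fill both halves at once
    return matrix


def format_matrix(matrix):
    """Serialize one row per line, space-separated (an assumed layout)."""
    return "\n".join(" ".join(f"{v:.6f}" for v in row) for row in matrix)


# Toy descriptors standing in for three models.
toy_descriptors = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
toy_matrix = dissimilarity_matrix(toy_descriptors)
print(format_matrix(toy_matrix))
```

Entry (i, j) holds the dissimilarity between model i and model j, so the diagonal is zero and smaller values mean more similar shapes.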

Data set

The database used in the SHREC’11 track contains 600 watertight triangle meshes derived from 30 original models: each of the 30 models was used to generate 19 deformed versions by articulating it around its joints in different ways, giving 30 × 20 = 600 meshes. Following the same steps, we acquired another 30 models from 3D Warehouse provided by Google, which vary more in texture and topological structure, and obtained 19 deformed versions of each new model in the same way. The new database therefore consists of 1200 watertight triangle meshes of *(the number will be available after the final results are announced) categories. The models are stored in the ASCII Object File Format (*.off).

(Note: some of these models, which we recreated and modified with permission, originally come from several publicly available databases, such as the McGill database, TOSCA shapes and the Princeton Shape Benchmark.)
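For readers unfamiliar with the format, the following is a minimal sketch of reading an ASCII OFF mesh and running a simple closedness check. It assumes the common layout (an `OFF` header line, a line with the vertex, face and edge counts, then vertex coordinates and face index lists) and does not handle variants such as a header fused with the counts. The function names and the tetrahedron sample are illustrative, not part of the track's tooling, and the edge test is only a necessary condition for a watertight mesh.

```python
from collections import Counter


def parse_off(text):
    """Parse ASCII OFF text into (vertices, faces)."""
    tokens = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if line:
            tokens.extend(line.split())
    if not tokens or tokens[0] != "OFF":
        raise ValueError("missing OFF header")
    n_vertices, n_faces = int(tokens[1]), int(tokens[2])  # tokens[3]: edge count
    pos = 4
    vertices = []
    for _ in range(n_vertices):
        vertices.append(tuple(float(t) for t in tokens[pos:pos + 3]))
        pos += 3
    faces = []
    for _ in range(n_faces):
        k = int(tokens[pos])  # number of vertices in this face (3 for triangles)
        faces.append(tuple(int(t) for t in tokens[pos + 1:pos + 1 + k]))
        pos += 1 + k
    return vertices, faces


def every_edge_shared_twice(faces):
    """True if each undirected edge belongs to exactly two faces."""
    edge_counts = Counter()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edge_counts[(min(a, b), max(a, b))] += 1
    return all(count == 2 for count in edge_counts.values())


# Illustrative sample: a tetrahedron (4 vertices, 4 triangles, 6 edges).
TETRAHEDRON_OFF = """OFF
# a tetrahedron
4 4 6
0 0 0
1 0 0
0 1 0
0 0 1
3 0 1 2
3 0 3 1
3 0 2 3
3 1 3 2
"""

vertices, faces = parse_off(TETRAHEDRON_OFF)
print(len(vertices), len(faces), every_edge_shared_twice(faces))
```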

Evaluation Methodology

We will employ the following evaluation measures: Precision-Recall curve; E-Measure; Discounted Cumulative Gain; Nearest Neighbor, First-Tier (Tier1) and Second-Tier (Tier2).
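To make three of these measures concrete, here is a minimal sketch of Nearest Neighbor, First-Tier and Second-Tier computed from a dissimilarity matrix and class labels. It follows the commonly used definitions (the query is excluded from its own ranking; with K the number of other models in the query's class, First-Tier is the fraction of them found in the top K results and Second-Tier in the top 2K). The track's actual evaluation code may differ in details such as tie handling, and the function name and toy data are assumptions made for illustration.

```python
def retrieval_scores(matrix, labels):
    """Average NN, First-Tier and Second-Tier over all queries."""
    n = len(labels)
    totals = {"NN": 0.0, "FT": 0.0, "ST": 0.0}
    queries = 0
    for q in range(n):
        # Rank every other model by increasing dissimilarity to the query.
        ranked = sorted((j for j in range(n) if j != q),
                        key=lambda j: matrix[q][j])
        relevant = [labels[j] == labels[q] for j in ranked]
        k = sum(relevant)  # size of the query's class, minus the query
        if k == 0:
            continue  # a singleton class has nothing to retrieve
        queries += 1
        totals["NN"] += 1.0 if relevant[0] else 0.0
        totals["FT"] += sum(relevant[:k]) / k
        totals["ST"] += sum(relevant[:2 * k]) / k
    if queries == 0:
        raise ValueError("no class has more than one member")
    return {name: total / queries for name, total in totals.items()}


# Toy data: six models on a line, two classes of three models each.
toy_positions = [0.0, 1.0, 6.0, 4.0, 9.0, 13.0]
toy_labels = ["a", "a", "a", "b", "b", "b"]
toy_distances = [[abs(p - r) for r in toy_positions] for p in toy_positions]
scores = retrieval_scores(toy_distances, toy_labels)
print(scores)
```

All three scores lie between 0 and 1, with higher values indicating better retrieval.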

Procedure

The following list is a step-by-step description of the activities:

- The participants must register by sending a message to SHREC@pku.edu.cn. Early registration is encouraged, so that we get an impression of the number of participants at an early stage.

- The test database will be made available via this website. Participants should first test their methods on the database used in the SHREC 2011 Non-rigid track and submit their results in order to receive the password for the new database.

- Participants will submit the dissimilarity matrix (also called the distance matrix) for the test database. Up to 5 matrices per group may be submitted, resulting from different runs. Each run may use a different algorithm or a different parameter setting. More information on the dissimilarity matrix file format

- The evaluations will be done automatically.

- The organizers will release the evaluation scores of all the runs.

- The participants write a one-page description of their method, and the organizers verify the submitted results.

- The track results are combined into a joint paper, published in the proceedings of the Eurographics Workshop on 3D Object Retrieval.

- The description of the tracks and their results are presented at the Eurographics Workshop on 3D Object Retrieval (May 2-3, 2015).

Schedule

| Date | Activity |
| --- | --- |
| January 16 | Call for participation. |
| January 20 | 10 sample models of the test database will be available on line. |
| January 22 (Extended to Jan 25) | Please register before this date. |
| January 22 (Extended to Jan 25) | Distribution of the whole database. Participants can start the retrieval. |
| January 29 (Extended to Jan 31) | Submission of results (dissimilarity matrix) and a one page description of their method(s). |
| February 2 (Extended to Feb 3) | Distribution of relevance judgments and evaluation scores. |
| February 3 | Submission of final descriptions (one page) for the contest proceedings. |
| February 14 | Track is finished, and results are ready for inclusion in a track report. |
| March 30 | Camera ready track papers submitted for printing. |
| May 2-3 | EUROGRAPHICS Workshop on 3D Object Retrieval including SHREC'15. |

Organizers

Zhouhui Lian, Jun Zhang